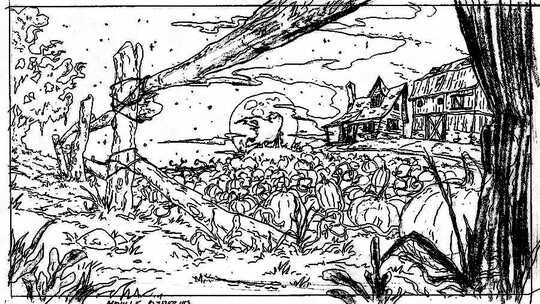

Your task is to create a program which takes a black-and-white outlined image (example images are below) and fills it in with colour. It is up to you how you section off each region and which colour to fill it with (you could even use an RNG).
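As a starting point, here is a minimal sketch of one way to section off and colour the regions. It is not a reference solution. It assumes the image has already been loaded into a list of rows of RGB tuples (the names `fill_regions`, `WHITE` and `BLACK` are made up for this illustration), treats every connected patch of pure white as one region, and picks a random colour for each. It uses a queue rather than recursion, so large regions cannot overflow the call stack.

```python
import random
from collections import deque

WHITE = (255, 255, 255)  # assumed background colour
BLACK = (0, 0, 0)        # assumed outline colour


def fill_regions(pixels, rng=None):
    """Colour each connected white region of `pixels` in place.

    `pixels` is a list of rows, each row a list of RGB tuples.
    Returns the number of regions that were filled.
    """
    rng = rng or random.Random()
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    regions = 0
    for y in range(height):
        for x in range(width):
            if pixels[y][x] != WHITE:
                continue  # outline pixel, or already coloured
            # Channels in 1..254 so the fill is never pure white or black.
            colour = tuple(rng.randrange(1, 255) for _ in range(3))
            pixels[y][x] = colour
            queue = deque([(x, y)])
            while queue:  # breadth-first flood fill, 4-connected
                cx, cy = queue.popleft()
                for nx, ny in ((cx + 1, cy), (cx - 1, cy),
                               (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and pixels[ny][nx] == WHITE):
                        pixels[ny][nx] = colour
                        queue.append((nx, ny))
            regions += 1
    return regions


if __name__ == "__main__":
    # A tiny 3x5 "image": a vertical black line splits it into two regions.
    image = [[WHITE, WHITE, BLACK, WHITE, WHITE] for _ in range(3)]
    print(fill_regions(image), "regions filled")
```

Swapping the random colour for a lookup keyed on region size, position or shape would be one way to work towards the customisability and realism criteria below.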

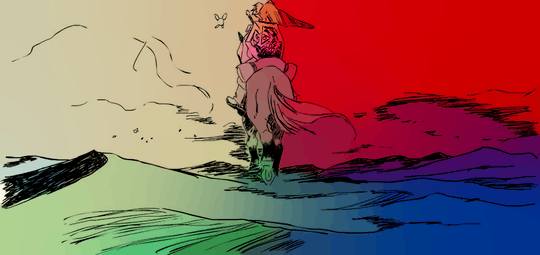

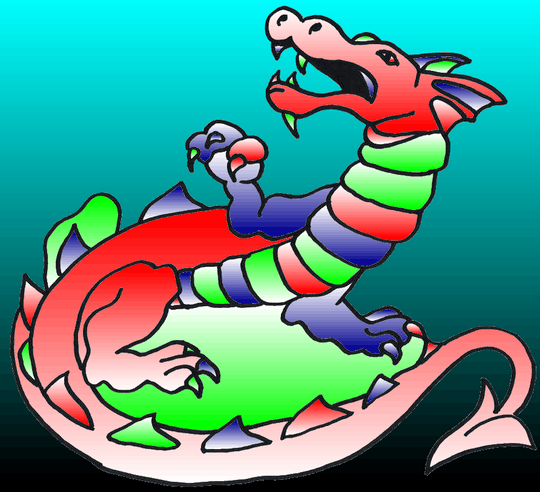

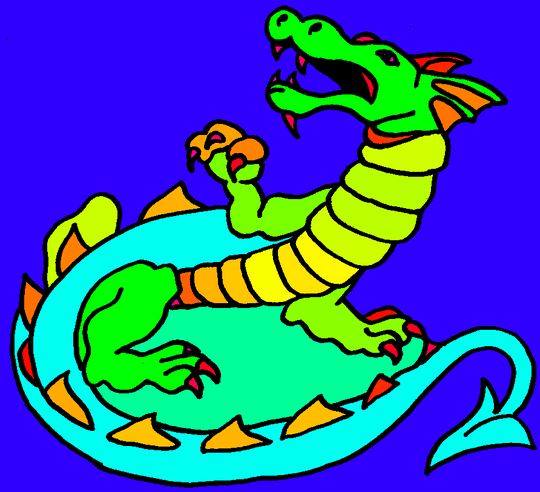

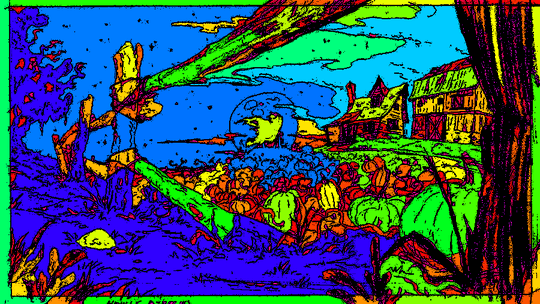

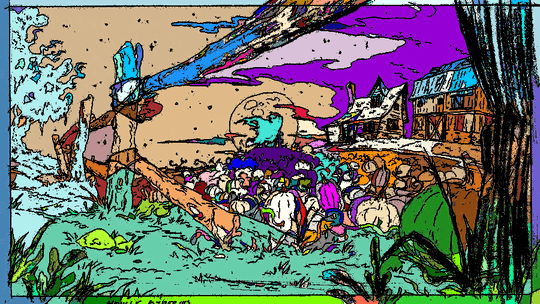

For example:

As you can see I am clearly an artist of a superior calibre when it comes to MS Paint.

Scoring

This is a popularity contest, so the answer with the most net votes wins. Voters are encouraged to judge answers by:

- Input criterion: any image that consists of a white/light-grey background and black/dark-grey outlines

- How well the colouring is done; meaning few or no areas are white unlike the above (unless you obviously intend to use white e.g. for clouds)

- Customisability of the colours used in certain sections

- How well the system works on a range of different images (of varying detail)

- Post how long your program takes per image. We might not be playing code golf, but shorter, faster and more efficient code should be regarded as better

- Should output the new image either onto the screen or to a file (no larger than 2MB so that it can be shown in the answer)

- Please justify why you chose to output to that image type and comment/explain the workings of your code

- The applicability of the colour used to the respective shape it is bound by (realistic colour scheme i.e. grass is green, wooden fences are brown etc.)

"I could randomly color each area, but if I could identify the "fence" and make it similarly colored, then that's something that deserves upvotes." - NathanMerrill

Seeing as this is a popularity contest, you can also optionally judge by:

- Overall appeal (how good the image looks)

- Artistic flair; if you can program in shading or watercolour-style colouring etc.

In general, the smallest outputted image (file size) of the highest quality, with the fastest program and the highest public vote, will win.

If you have other judging specifications that you think should be used, please recommend them in the comments of this post.

Examples

I own nothing; all example images are under a Creative Commons license.

Source: https://pixabay.com/ro/stejar-arbore-schi%C5%A3%C4%83-natura-303890/

Source: http://www.freestockphotos.biz/stockphoto/10665

Source: http://crystal-rose1981.deviantart.com/art/Dragon-Tattoo-Outline-167320011

Source: http://jaclynonacloudlines.deviantart.com/art/Gryphon-Lines-PF-273195317

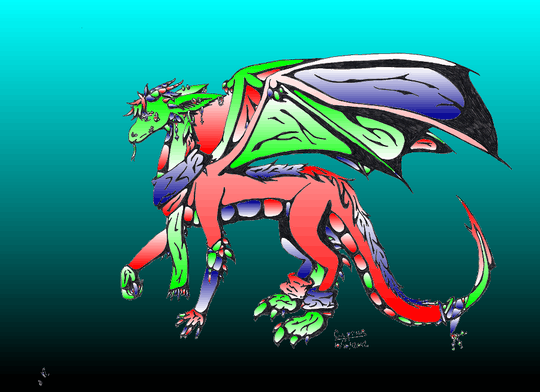

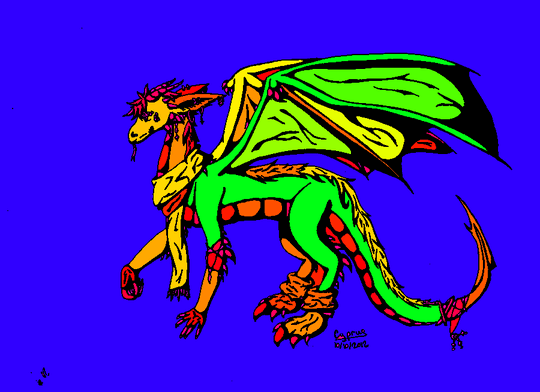

Source: http://captaincyprus.deviantart.com/art/Dragon-OutLine-331748686

Source: http://electric-meat.deviantart.com/art/A-Heroes-Farewell-280271639

Source: http://movillefacepalmplz.deviantart.com/art/Background-The-Pumpkin-Farm-of-Good-old-Days-342865938

EDIT: Anti-aliasing on the lines produces pixels that are neither black nor white, and some images contain grey instead of pure black/white. As a bonus challenge, you can attempt to deal with this. It should be easy enough, in my opinion.
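One simple way to approach the anti-aliasing bonus is to threshold the image to pure black and white before filling. The sketch below is only an illustration: the function name `binarise`, the default cut-off of 128, and the list-of-rows pixel format are all assumptions, and the brightness estimate uses the common Rec. 601 luma weights.

```python
WHITE = (255, 255, 255)
BLACK = (0, 0, 0)


def binarise(pixels, threshold=128):
    """Return a new grid in which every pixel is pure black or pure white.

    `pixels` is a list of rows of RGB tuples. Pixels whose estimated
    brightness is below `threshold` (an assumed cut-off) become outline.
    """
    result = []
    for row in pixels:
        new_row = []
        for r, g, b in row:
            # Integer approximation of 0.299 R + 0.587 G + 0.114 B.
            luminance = (299 * r + 587 * g + 114 * b) // 1000
            new_row.append(BLACK if luminance < threshold else WHITE)
        result.append(new_row)
    return result


if __name__ == "__main__":
    # Light grey background, dark grey line, and an anti-aliased edge pixel.
    row = [(200, 200, 200), (60, 60, 60), (140, 140, 140)]
    print(binarise([row]))
```

A lower threshold keeps thin lines thin but may leave gaps in faint outlines, which lets colour leak between regions; a higher one closes gaps at the cost of thicker lines.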

To everyone: please do not downvote/close this as an "art contest" - there is more to it – edc65 – 2016-01-07T21:41:43.437

Welcome to PPCG! I applaud you for having the courage to not only have your first post be a challenge, and not only a pop-con challenge, but an artistic challenge on top of it all. Good luck, I wish you the best, and if you stick around I think you'll be going far here. – AdmBorkBork – 2016-01-07T21:46:03.710

@NathanMerrill does that edit suffice? – OliverGriffin – 2016-01-07T22:13:36.987

@TimmyD thanks for such a warm welcome; a real confidence boost! – OliverGriffin – 2016-01-07T22:13:40.963

@OliverGriffin I'm voting against closing and also, I've added in the images you linked for you. You can remove the comments, if you wish. – Addison Crump – 2016-01-07T22:33:52.187

I finally found an approach that probably won't stack overflow, but now it's running kind of slowly. – SuperJedi224 – 2016-01-07T22:37:59.023

@SuperJedi224 "probably" – Addison Crump – 2016-01-07T23:11:03.217

I've voted to close this question because it does not have an objective winning criterion. The answer itself cannot be judged by a color scheme or the author's "artistic style", as this is an opinion that varies from person to person. Please consider removing these criteria. I believe that (among other things) would strengthen the question. You may also want to consider clarifying some of the input/output specs. – Zach Gates – 2016-01-07T23:36:04.633

@OliverGriffin much better. One last improvement I'd suggest is to take the advice of Zach. I'd recommend editing "Artistic style" to something along the lines of how well the colors mix with each other in respect to their shapes. I could randomly color each area, but if I could identify the "fence" and make it similarly colored, then that's something that deserves upvotes. – Nathan Merrill – 2016-01-08T00:25:21.123

@ZachGates all due respect, it is tagged as a popularity contest, so it's obvious that opinion will vary from person to person. Taking your other advice into account, I attempted to clarify the input/output specs and an objective winning criterion. I hope this satisfies your needs enough to reopen the post, but if you have other suggestions, feel free to mention them. At the end of the day, I view this as a fun activity and consider anyone who partakes a winner. – OliverGriffin – 2016-01-08T01:27:29.283

I've voted to reopen your question and have changed my -1 to a +1. Good job editing and adding additional information. Also, I applaud you for being so receptive to community criticism. Welcome to PPCG! Hope you enjoy it. – Zach Gates – 2016-01-08T01:58:35.423

@ZachGates thank you. I am eager to see what answers people can come up with; though I must question, is anybody actually working towards an answer? – OliverGriffin – 2016-01-08T02:21:10.647

With the new "applicability of colour" criterion, I think new, simpler test cases might be useful. I doubt most people would account for dragon-belly-white if their program found an oval-shaped area to fill. – Sp3000 – 2016-01-08T07:13:05.470

@Sp3000 sorry, I barely caught a word of that. Could you please rephrase? – OliverGriffin – 2016-01-08T07:29:05.517

Basically I mean that I think the example images are fairly complex to guess a reasonable colour scheme for, and that adding a few simpler test cases would be good (also 3/5 images consist of a sole mythical creature, and I think it'd be better if there's more variety in the images) – Sp3000 – 2016-01-08T07:40:34.500

@Sp3000 It wasn't my intention to use so many mythical creatures; I simply chose random Creative Commons images from the web. I added a tree and a chicken for diversity. I was thinking along the lines of grouping bounding boxes that would use a certain colour, thus giving the programmer control if some time were dedicated towards the 'colouring' aspect rather than just the coding. Still, the answerer could use a different image if that would make it easier. – OliverGriffin – 2016-01-08T08:22:23.107

I don't get the point of smallest outputted image. 1x1 pixel image is the winner? – edc65 – 2016-01-08T08:34:32.373

If all the images are Creative Commons, you will also need to state which Creative Commons license applies and provide attribution for each image (it's fine to post them here but all Creative Commons licenses require attribution). – trichoplax – 2016-01-11T00:37:27.897

Also, providing attribution linking back to the original material gives people here the opportunity to let the original artists know about this question - I imagine they might like to see what their work has gone on to inspire :) – trichoplax – 2016-01-11T00:41:48.730

@trichoplax thanks, that's a very good point, and (I hope) a great idea for the artists! I had the links but I seem to have lost them. I will reverse image search to find the pages I got them from, then post the links. My initial problem was that my reputation wasn't high enough to post all of the links, but given how many upvotes the question got (thank you) I should now be able to. Erm, yes, it does refer to file size; I thought I changed it to clarify in the original post after seeing that comment. Should I also have replied to inform him of the changes? – OliverGriffin – 2016-01-11T00:50:20.133

No it's good that you updated the question - then everyone can understand without reading all the comments. I should have noticed the question had been updated - I'll delete my comment... – trichoplax – 2016-01-11T00:52:10.353