Introduction

In this challenge, you are given a list of nonnegative floating point numbers drawn independently from some probability distribution. Your task is to infer that distribution from the numbers. To make the challenge feasible, you only have five distributions to choose from.

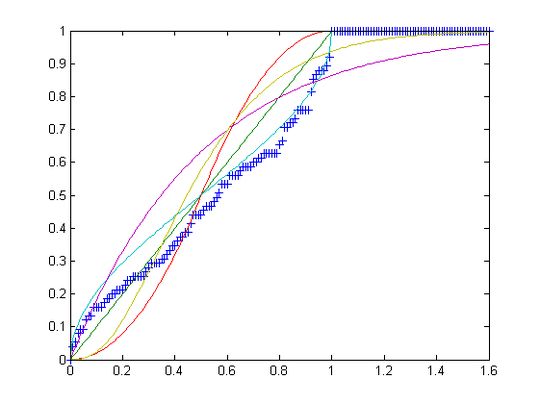

- U, the uniform distribution on the interval [0,1].
- T, the triangular distribution on the interval [0,1] with mode c = 1/2.
- B, the beta distribution on the interval [0,1] with parameters α = β = 1/2.
- E, the exponential distribution on the interval [0,∞) with rate λ = 2.
- G, the gamma distribution on the interval [0,∞) with parameters k = 3 and θ = 1/6.

Note that all of the above distributions have mean exactly 1/2.
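Because all five means coincide, the sample mean alone cannot tell the distributions apart. The sketch below computes each distribution's exact mean and variance with Python's `fractions` module and the standard closed-form moment formulas, to see which second-order statistic might help. The function name `moments` is illustrative only.

```python
from fractions import Fraction as F

# Closed-form (mean, variance) for each candidate distribution,
# using the parameters listed in the challenge.
def moments():
    # Uniform on [0,1]: mean 1/2, variance 1/12
    u = (F(1, 2), F(1, 12))
    # Triangular on [a,b] = [0,1] with mode c = 1/2:
    # mean (a+b+c)/3, variance (a^2+b^2+c^2-ab-ac-bc)/18
    a, b, c = F(0), F(1), F(1, 2)
    t = ((a + b + c) / 3, (a * a + b * b + c * c - a * b - a * c - b * c) / 18)
    # Beta(alpha, beta): mean alpha/(alpha+beta),
    # variance alpha*beta / ((alpha+beta)^2 * (alpha+beta+1))
    al = be = F(1, 2)
    beta = (al / (al + be), al * be / ((al + be) ** 2 * (al + be + 1)))
    # Exponential with rate lambda: mean 1/lambda, variance 1/lambda^2
    lam = F(2)
    e = (1 / lam, 1 / lam ** 2)
    # Gamma with shape k and scale theta: mean k*theta, variance k*theta^2
    k, theta = F(3), F(1, 6)
    g = (k * theta, k * theta ** 2)
    return {"U": u, "T": t, "B": beta, "E": e, "G": g}

for name, (mean, var) in moments().items():
    print(name, mean, var)
```

Every mean prints as 1/2, and the variances are 1/12 (U), 1/24 (T), 1/8 (B), 1/4 (E) and 1/12 (G). U and G share variance 1/12, so variance alone cannot separate them either; the fact that only E and G can produce values above 1 is one candidate second feature.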

The Task

Your input is an array of nonnegative floating point numbers, of length between 75 and 100 inclusive.

Your output shall be one of the letters UTBEG, based on which of the above distributions you guess the numbers are drawn from.
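One possible approach, sketched here purely as an illustration (it is not taken from any submitted answer, and its thresholds are untuned guesses placed halfway between the theoretical variances), combines two observations: a value above 1 rules out U, T and B, and the sample variance then orders the remaining candidates. The function name `classify` is hypothetical.

```python
import statistics

# Hypothetical thresholds: midpoints between neighbouring theoretical
# variances (T 1/24, U 1/12, B 1/8 on [0,1]; G 1/12, E 1/4 on [0,inf)).
T_U = (1 / 24 + 1 / 12) / 2   # 0.0625
U_B = (1 / 12 + 1 / 8) / 2    # about 0.104
G_E = (1 / 12 + 1 / 4) / 2    # about 0.167

def classify(xs):
    """Guess which of U, T, B, E, G produced the sample xs (heuristic sketch)."""
    v = statistics.pvariance(xs)   # population variance of the sample
    if max(xs) > 1:
        # Only E and G have support beyond 1; E has the larger variance.
        return "E" if v > G_E else "G"
    # Bounded case: variances are ordered T < U < B.
    if v < T_U:
        return "T"
    if v > U_B:
        return "B"
    return "U"

print(classify([0.5] * 80))   # zero variance, all values <= 1
```

A known weakness: a G sample whose values all happen to stay at or below 1 falls into the bounded branch and, since G and U share variance 1/12, will most likely be labelled U. The sketch has not been checked against the repository's test files, so its score is unknown.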

Rules and Scoring

You can give either a full program or a function. Standard loopholes are disallowed.

In this repository, there are five text files, one for each distribution, each exactly 100 lines long. Each line contains a comma-delimited list of 75 to 100 floats drawn independently from the distribution and truncated to 7 digits after the decimal point. You can modify the delimiters to match your language's native array format. To qualify as an answer, your program should correctly classify at least 50 lists from each file. The score of a valid answer is byte count + total number of misclassified lists. The lowest score wins.
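The validity rule and the score can be expressed as a small harness. This is a sketch under assumptions: the names `parse_line` and `score` are hypothetical, and the caller is assumed to have already read each distribution's file into a list of lines (the actual file names are not stated here).

```python
def parse_line(line):
    # One test case: comma-delimited floats, e.g. "0.1234567,0.7654321"
    return [float(tok) for tok in line.strip().split(",")]

def score(classify, cases, byte_count):
    """Return byte_count + number of misclassified lists, or None if invalid.

    cases maps each letter in "UTBEG" to the raw lines of that
    distribution's file. An answer is valid only if it classifies
    at least 50 lines of every file correctly.
    """
    misses = 0
    for letter, lines in cases.items():
        correct = sum(classify(parse_line(line)) == letter for line in lines)
        if correct < 50:
            return None  # fails the qualification rule for this file
        misses += len(lines) - correct
    return byte_count + misses
```

For example, a 10-byte answer that gets 60 of 100 U lines and all 100 E lines right would score 10 + 40 = 50.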

I probably should have asked earlier, but how much optimization towards the test cases is expected? I'm at a point where I can improve my score by tweaking a few parameters, but the impact on the score will probably depend on the given test cases. – Dennis – 2015-11-03T19:38:16.323

@Dennis You can optimize as much as you want, the test cases are a fixed part of the challenge. – Zgarb – 2015-11-03T19:55:22.650

Y U NO Student-t distribution? =( – N3buchadnezzar – 2015-11-29T10:30:59.930