20

3

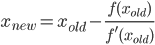

Write a function or complete program that takes a positive number n and performs n steps of an iterative algorithm for calculating π that has quadratic convergence (i.e. it approximately doubles the number of accurate digits at every iteration) then returns or prints out 2n correct digits (including the beginning 3). One such algorithm is the Gauss–Legendre algorithm, but you are free to use a different algorithm if you prefer.

Examples:

input 1 → output 3.1

input 2 → output 3.141

input 5 → output 3.1415926535897932384626433832795

Requirements:

- Each iteration of the algorithm must perform a constant number of basic operations such as addition, subtraction, multiplication, division, power and root (with integer exponent/degree) - each such operation on "big" integer/decimal numbers is counted as one even if it involves one or more loops internally. To be clear, trigonometric functions and powers involving complex numbers are not basic operations.

- The algorithm is expected to have an initialization step which must also have a constant number of operations.

- If the algorithm needs 1 or 2 more iterations to get to 2n correct digits, you can perform up to

n+2iterations instead of justn. - If it wasn't clear enough, after the correct 2n digits, your program must not print anything else (such as more correct digits, wrong digits or the complete works of Shakespeare).

- Your program must support values of

nfrom 1 to at least 20. - Your program should not take more than an hour for

n=20 on a modern computer (not a hard rule, but try to keep it reasonable). - The program must not obtain more than 20 accurate digits after the initialization and first iteration of the algorithm.

- The program must be runnable in Linux using freely available software.

- The source code must use only ASCII characters.

Scoring:

Straightforward code golf, shortest code wins.

Winner:

The winner is Digital Trauma, I finally finished running his code on n=20 (just kidding). Special prize goes to primo for his very fast python solution and different algorithm :)

1Quadratic convergence is error ~ N^(1/2). What you describe is exponential convergence error ~ 2^(-N). – yo' – 2015-03-18T11:26:27.583

@yo' are you saying that wikipedia is wrong?

– aditsu quit because SE is EVIL – 2015-03-18T19:55:51.380@yo' binary digits? What? No, you should compute pi in whatever form you want with 2^n correct decimal digits and output them in decimal representation. – aditsu quit because SE is EVIL – 2015-03-18T19:58:20.603

1Misleading, at least to say: "quadratic convergence" is

~q^(n^2)according to the 1st section there and~q^2according to the 2nd section there. – yo' – 2015-03-18T20:03:11.6371I don't understand codegolf: surely anyone could just write their own programming language specifically for a single task like this, then write a program of, say, 0 bytes? – theonlygusti – 2015-03-18T20:52:34.390

2

@theonlygusti that would be a standard loophole and would get disqualified

– aditsu quit because SE is EVIL – 2015-03-18T20:54:55.643@yo' I didn't change the conditions, I only clarified what should have been obvious but apparently wasn't. Also, your answer was not the only one that doesn't meet the requirements. – aditsu quit because SE is EVIL – 2015-03-25T10:16:07.167

@yo'

exp(I*p).Imag()is just a long-winded way to writesin(p). That this was excluded is evident from the original problem statement. Why not just useacos(-1)? – primo – 2015-03-25T11:15:09.360