C# + OpenCvSharp, Score: 2

This is my second attempt. It is quite different from my first attempt, which is a lot simpler, so I am posting it as a separate solution.

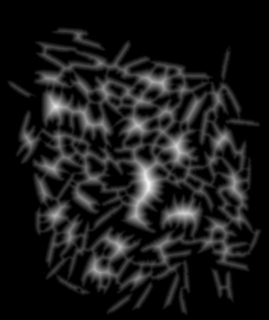

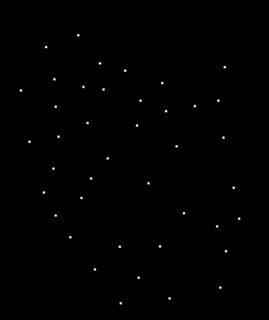

The basic idea is to identify and label each individual grain by an iterative ellipse fit. Then remove the pixels for this grain from the source, and try to find the next grain, until every pixel has been labeled.

This is not the most pretty solution. It is a giant hog with 600 lines of code.

It needs 1.5 minutes for the biggest image. And I really apologize for the messy code.

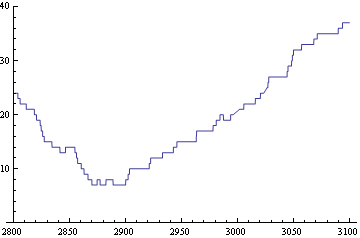

There are so many parameters and ways to thinker in this thing that I am quite afraid of overfitting my program for the 10 sample images. The final score of 2 is almost definitely a case of overfitting: I have two parameters, average grain size in pixel, and minimum ratio of pixel / elipse_area, and at the end I simply exhausted all combinations of these two parameters until I got the lowest score. I am not sure whether this is all that kosher with the rules of this challenge.

average_grain_size_in_pixel = 2530

pixel / elipse_area >= 0.73

But even without these overfitting clutches, the results are quite nice.

Without a fixed grain size or pixel-ratio, simply by estimating the average grain size from the training images, the score is still 27.

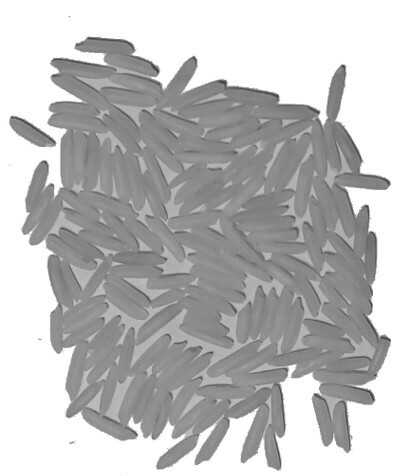

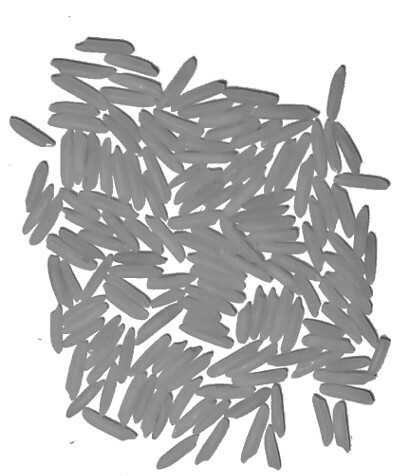

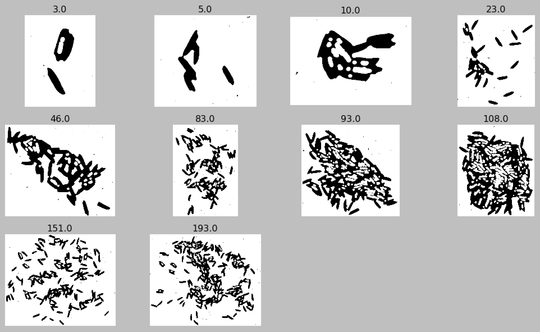

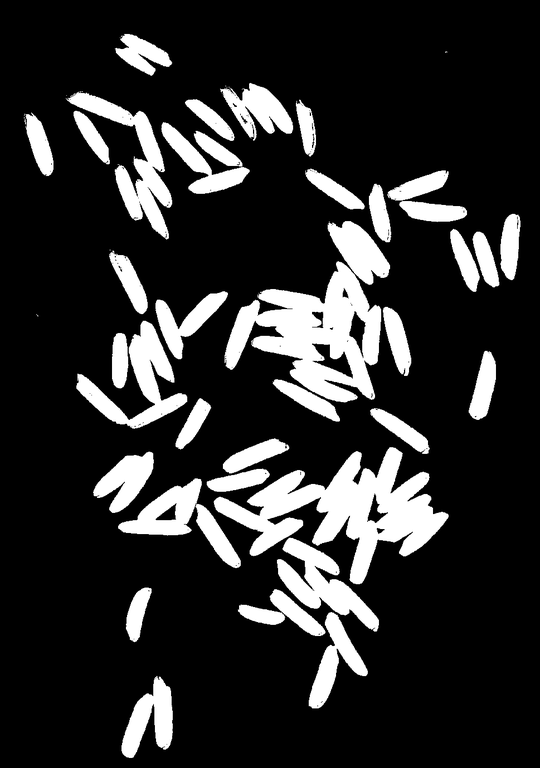

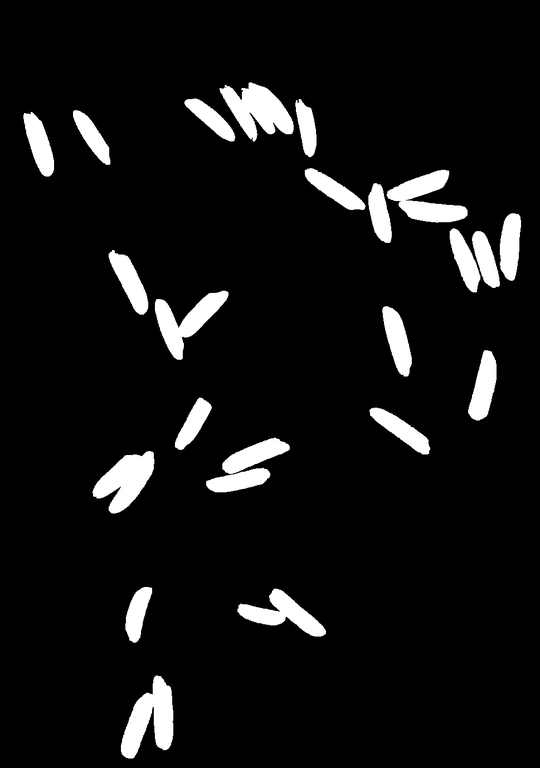

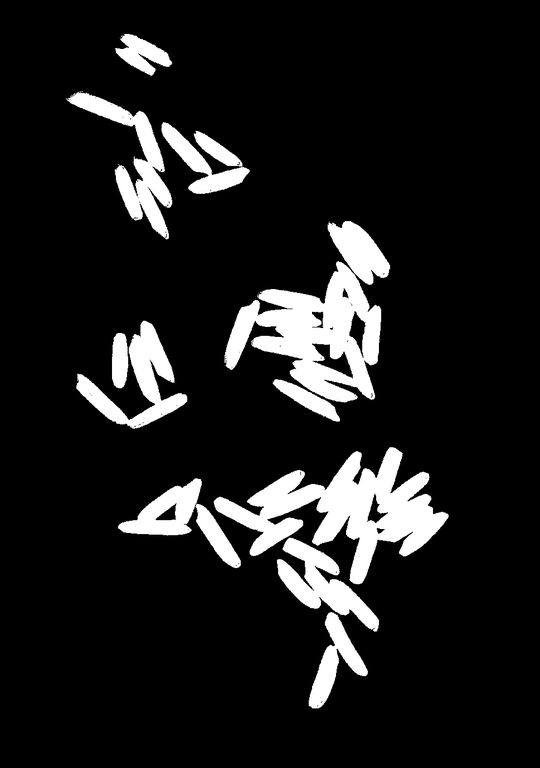

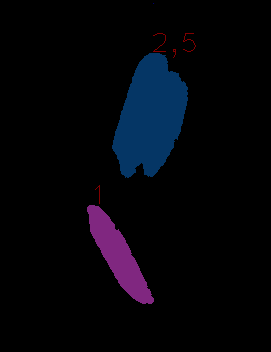

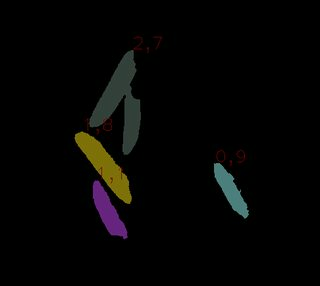

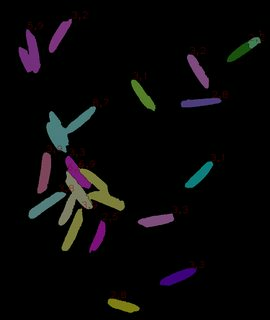

And I get as the output not only the number, but the actual position, orientation and shape of each grain. there are a small number of mislabeled grains, but overall most of the labels accurately match the real grains:

A B

B C

C D

D E

E

F G

G H

H I

I J

J

(click on each image for the full-sized version)

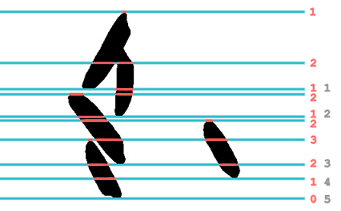

After this labeling step, my program looks at each individual grain, and estimates based on the number of pixels and the pixel/ellipse-area ratio, whether this is

- a single grain (+1)

- multiple grains mislabeled as one (+X)

- a blob too small to be a grain (+0)

The error scores for each image are

A:0; B:0; C:0; D:0; E:2; F:0; G:0 ; H:0; I:0, J:0

However the actual error is probably a little higher. Some errors within the same image cancel each other out. Image H in particular has some badly mislabeled grains, whereas in image E the labels are mostly correct

The concept is a little contrived:

One of my main problems was that I did not want to implement a full ellipse-point distance metric, since calculating that in itself is a complicated iterative process. So I used various workarounds using the OpenCV functions Ellipse2Poly and FitEllipse, and the results are not too pretty.

Apparently i also broke the size limit for codegolf.

An answer is limited to 30000 characters, i am currently at 34000. So I'll have to shorten the code below somewhat.

The full code can be seen at http://pastebin.com/RgM7hMxq

Sorry for this, I was not aware that there was a size limit.

class Program

{

static void Main(string[] args)

{

// Due to size constraints, I removed the inital part of my program that does background separation. For the full source, check the link, or see my previous program.

// list of recognized grains

List<Grain> grains = new List<Grain>();

Random rand = new Random(4); // determined by fair dice throw, guaranteed to be random

// repeat until we have found all grains (to a maximum of 10000)

for (int numIterations = 0; numIterations < 10000; numIterations++ )

{

// erode the image of the remaining foreground pixels, only big blobs can be grains

foreground.Erode(erodedForeground,null,7);

// pick a number of starting points to fit grains

List<CvPoint> startPoints = new List<CvPoint>();

using (CvMemStorage storage = new CvMemStorage())

using (CvContourScanner scanner = new CvContourScanner(erodedForeground, storage, CvContour.SizeOf, ContourRetrieval.List, ContourChain.ApproxNone))

{

if (!scanner.Any()) break; // no grains left, finished!

// search for grains within the biggest blob first (this is arbitrary)

var biggestBlob = scanner.OrderByDescending(c => c.Count()).First();

// pick 10 random edge pixels

for (int i = 0; i < 10; i++)

{

startPoints.Add(biggestBlob.ElementAt(rand.Next(biggestBlob.Count())).Value);

}

}

// for each starting point, try to fit a grain there

ConcurrentBag<Grain> candidates = new ConcurrentBag<Grain>();

Parallel.ForEach(startPoints, point =>

{

Grain candidate = new Grain(point);

candidate.Fit(foreground);

candidates.Add(candidate);

});

Grain grain = candidates

.OrderByDescending(g=>g.Converged) // we don't want grains where the iterative fit did not finish

.ThenBy(g=>g.IsTooSmall) // we don't want tiny grains

.ThenByDescending(g => g.CircumferenceRatio) // we want grains that have many edge pixels close to the fitted elipse

.ThenBy(g => g.MeanSquaredError)

.First(); // we only want the best fit among the 10 candidates

// count the number of foreground pixels this grain has

grain.CountPixel(foreground);

// remove the grain from the foreground

grain.Draw(foreground,CvColor.Black);

// add the grain to the colection fo found grains

grains.Add(grain);

grain.Index = grains.Count;

// draw the grain for visualisation

grain.Draw(display, CvColor.Random());

grain.DrawContour(display, CvColor.Random());

grain.DrawEllipse(display, CvColor.Random());

//display.SaveImage("10-foundGrains.png");

}

// throw away really bad grains

grains = grains.Where(g => g.PixelRatio >= 0.73).ToList();

// estimate the average grain size, ignoring outliers

double avgGrainSize =

grains.OrderBy(g => g.NumPixel).Skip(grains.Count/10).Take(grains.Count*9/10).Average(g => g.NumPixel);

//ignore the estimated grain size, use a fixed size

avgGrainSize = 2530;

// count the number of grains, using the average grain size

double numGrains = grains.Sum(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize));

// get some statistics

double avgWidth = grains.Where(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) == 1).Average(g => g.Width);

double avgHeight = grains.Where(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) == 1).Average(g => g.Height);

double avgPixelRatio = grains.Where(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) == 1).Average(g => g.PixelRatio);

int numUndersized = grains.Count(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) < 1);

int numOversized = grains.Count(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) > 1);

double avgWidthUndersized = grains.Where(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) < 1).Select(g=>g.Width).DefaultIfEmpty(0).Average();

double avgHeightUndersized = grains.Where(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) < 1).Select(g => g.Height).DefaultIfEmpty(0).Average();

double avgGrainSizeUndersized = grains.Where(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) < 1).Select(g => g.NumPixel).DefaultIfEmpty(0).Average();

double avgPixelRatioUndersized = grains.Where(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) < 1).Select(g => g.PixelRatio).DefaultIfEmpty(0).Average();

double avgWidthOversized = grains.Where(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) > 1).Select(g => g.Width).DefaultIfEmpty(0).Average();

double avgHeightOversized = grains.Where(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) > 1).Select(g => g.Height).DefaultIfEmpty(0).Average();

double avgGrainSizeOversized = grains.Where(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) > 1).Select(g => g.NumPixel).DefaultIfEmpty(0).Average();

double avgPixelRatioOversized = grains.Where(g => Math.Round(g.NumPixel * 1.0 / avgGrainSize) > 1).Select(g => g.PixelRatio).DefaultIfEmpty(0).Average();

Console.WriteLine("===============================");

Console.WriteLine("Grains: {0}|{1:0.} of {2} (e{3}), size {4:0.}px, {5:0.}x{6:0.} {7:0.000} undersized:{8} oversized:{9} {10:0.0} minutes {11:0.0} s per grain",grains.Count,numGrains,expectedGrains[fileNo],expectedGrains[fileNo]-numGrains,avgGrainSize,avgWidth,avgHeight, avgPixelRatio,numUndersized,numOversized,watch.Elapsed.TotalMinutes, watch.Elapsed.TotalSeconds/grains.Count);

// draw the description for each grain

foreach (Grain grain in grains)

{

grain.DrawText(avgGrainSize, display, CvColor.Black);

}

display.SaveImage("10-foundGrains.png");

display.SaveImage("X-" + file + "-foundgrains.png");

}

}

}

}

public class Grain

{

private const int MIN_WIDTH = 70;

private const int MAX_WIDTH = 130;

private const int MIN_HEIGHT = 20;

private const int MAX_HEIGHT = 35;

private static CvFont font01 = new CvFont(FontFace.HersheyPlain, 0.5, 1);

private Random random = new Random(4); // determined by fair dice throw; guaranteed to be random

/// <summary> center of grain </summary>

public CvPoint2D32f Position { get; private set; }

/// <summary> Width of grain (always bigger than height)</summary>

public float Width { get; private set; }

/// <summary> Height of grain (always smaller than width)</summary>

public float Height { get; private set; }

public float MinorRadius { get { return this.Height / 2; } }

public float MajorRadius { get { return this.Width / 2; } }

public double Angle { get; private set; }

public double AngleRad { get { return this.Angle * Math.PI / 180; } }

public int Index { get; set; }

public bool Converged { get; private set; }

public int NumIterations { get; private set; }

public double CircumferenceRatio { get; private set; }

public int NumPixel { get; private set; }

public List<EllipsePoint> EdgePoints { get; private set; }

public double MeanSquaredError { get; private set; }

public double PixelRatio { get { return this.NumPixel / (Math.PI * this.MajorRadius * this.MinorRadius); } }

public bool IsTooSmall { get { return this.Width < MIN_WIDTH || this.Height < MIN_HEIGHT; } }

public Grain(CvPoint2D32f position)

{

this.Position = position;

this.Angle = 0;

this.Width = 10;

this.Height = 10;

this.MeanSquaredError = double.MaxValue;

}

/// <summary> fit a single rice grain of elipsoid shape </summary>

public void Fit(CvMat img)

{

// distance between the sampled points on the elipse circumference in degree

int angularResolution = 1;

// how many times did the fitted ellipse not change significantly?

int numConverged = 0;

// number of iterations for this fit

int numIterations;

// repeat until the fitted ellipse does not change anymore, or the maximum number of iterations is reached

for (numIterations = 0; numIterations < 100 && !this.Converged; numIterations++)

{

// points on an ideal ellipse

CvPoint[] points;

Cv.Ellipse2Poly(this.Position, new CvSize2D32f(MajorRadius, MinorRadius), Convert.ToInt32(this.Angle), 0, 359, out points,

angularResolution);

// points on the edge of foregroudn to background, that are close to the elipse

CvPoint?[] edgePoints = new CvPoint?[points.Length];

// remeber if the previous pixel in a given direction was foreground or background

bool[] prevPixelWasForeground = new bool[points.Length];

// when the first edge pixel is found, this value is updated

double firstEdgePixelOffset = 200;

// from the center of the elipse towards the outside:

for (float offset = -this.MajorRadius + 1; offset < firstEdgePixelOffset + 20; offset++)

{

// draw an ellipse with the given offset

Cv.Ellipse2Poly(this.Position, new CvSize2D32f(MajorRadius + offset, MinorRadius + (offset > 0 ? offset : MinorRadius / MajorRadius * offset)), Convert.ToInt32(this.Angle), 0,

359, out points, angularResolution);

// for each angle

Parallel.For(0, points.Length, i =>

{

if (edgePoints[i].HasValue) return; // edge for this angle already found

// check if the current pixel is foreground

bool foreground = points[i].X < 0 || points[i].Y < 0 || points[i].X >= img.Cols || points[i].Y >= img.Rows

? false // pixel outside of image borders is always background

: img.Get2D(points[i].Y, points[i].X).Val0 > 0;

if (prevPixelWasForeground[i] && !foreground)

{

// found edge pixel!

edgePoints[i] = points[i];

// if this is the first edge pixel we found, remember its offset. the other pixels cannot be too far away, so we can stop searching soon

if (offset < firstEdgePixelOffset && offset > 0) firstEdgePixelOffset = offset;

}

prevPixelWasForeground[i] = foreground;

});

}

// estimate the distance of each found edge pixel from the ideal elipse

// this is a hack, since the actual equations for estimating point-ellipse distnaces are complicated

Cv.Ellipse2Poly(this.Position, new CvSize2D32f(MajorRadius, MinorRadius), Convert.ToInt32(this.Angle), 0, 360,

out points, angularResolution);

var pointswithDistance =

edgePoints.Select((p, i) => p.HasValue ? new EllipsePoint(p.Value, points[i], this.Position) : null)

.Where(p => p != null).ToList();

if (pointswithDistance.Count == 0)

{

Console.WriteLine("no points found! should never happen! ");

break;

}

// throw away all outliers that are too far outside the current ellipse

double medianSignedDistance = pointswithDistance.OrderBy(p => p.SignedDistance).ElementAt(pointswithDistance.Count / 2).SignedDistance;

var goodPoints = pointswithDistance.Where(p => p.SignedDistance < medianSignedDistance + 15).ToList();

// do a sort of ransack fit with the inlier points to find a new better ellipse

CvBox2D bestfit = ellipseRansack(goodPoints);

// check if the fit has converged

if (Math.Abs(this.Angle - bestfit.Angle) < 3 && // angle has not changed much (<3°)

Math.Abs(this.Position.X - bestfit.Center.X) < 3 && // position has not changed much (<3 pixel)

Math.Abs(this.Position.Y - bestfit.Center.Y) < 3)

{

numConverged++;

}

else

{

numConverged = 0;

}

if (numConverged > 2)

{

this.Converged = true;

}

//Console.WriteLine("Iteration {0}, delta {1:0.000} {2:0.000} {3:0.000} {4:0.000}-{5:0.000} {6:0.000}-{7:0.000} {8:0.000}-{9:0.000}",

// numIterations, Math.Abs(this.Angle - bestfit.Angle), Math.Abs(this.Position.X - bestfit.Center.X), Math.Abs(this.Position.Y - bestfit.Center.Y), this.Angle, bestfit.Angle, this.Position.X, bestfit.Center.X, this.Position.Y, bestfit.Center.Y);

double msr = goodPoints.Sum(p => p.Distance * p.Distance) / goodPoints.Count;

// for drawing the polygon, filter the edge points more strongly

if (goodPoints.Count(p => p.SignedDistance < 5) > goodPoints.Count / 2)

goodPoints = goodPoints.Where(p => p.SignedDistance < 5).ToList();

double cutoff = goodPoints.Select(p => p.Distance).OrderBy(d => d).ElementAt(goodPoints.Count * 9 / 10);

goodPoints = goodPoints.Where(p => p.SignedDistance <= cutoff + 1).ToList();

int numCertainEdgePoints = goodPoints.Count(p => p.SignedDistance > -2);

this.CircumferenceRatio = numCertainEdgePoints * 1.0 / points.Count();

this.Angle = bestfit.Angle;

this.Position = bestfit.Center;

this.Width = bestfit.Size.Width;

this.Height = bestfit.Size.Height;

this.EdgePoints = goodPoints;

this.MeanSquaredError = msr;

}

this.NumIterations = numIterations;

//Console.WriteLine("Grain found after {0,3} iterations, size={1,3:0.}x{2,3:0.} pixel={3,5} edgePoints={4,3} msr={5,2:0.00000}", numIterations, this.Width,

// this.Height, this.NumPixel, this.EdgePoints.Count, this.MeanSquaredError);

}

/// <summary> a sort of ransakc fit to find the best ellipse for the given points </summary>

private CvBox2D ellipseRansack(List<EllipsePoint> points)

{

using (CvMemStorage storage = new CvMemStorage(0))

{

// calculate minimum bounding rectangle

CvSeq<CvPoint> fullPointSeq = CvSeq<CvPoint>.FromArray(points.Select(p => p.Point), SeqType.EltypePoint, storage);

var boundingRect = fullPointSeq.MinAreaRect2();

// the initial candidate is the previously found ellipse

CvBox2D bestEllipse = new CvBox2D(this.Position, new CvSize2D32f(this.Width, this.Height), (float)this.Angle);

double bestError = calculateEllipseError(points, bestEllipse);

Queue<EllipsePoint> permutation = new Queue<EllipsePoint>();

if (points.Count >= 5) for (int i = -2; i < 20; i++)

{

CvBox2D ellipse;

if (i == -2)

{

// first, try the ellipse described by the boundingg rect

ellipse = boundingRect;

}

else if (i == -1)

{

// then, try the best-fit ellipsethrough all points

ellipse = fullPointSeq.FitEllipse2();

}

else

{

// then, repeatedly fit an ellipse through a random sample of points

// pick some random points

if (permutation.Count < 5) permutation = new Queue<EllipsePoint>(permutation.Concat(points.OrderBy(p => random.Next())));

CvSeq<CvPoint> pointSeq = CvSeq<CvPoint>.FromArray(permutation.Take(10).Select(p => p.Point), SeqType.EltypePoint, storage);

for (int j = 0; j < pointSeq.Count(); j++) permutation.Dequeue();

// fit an ellipse through these points

ellipse = pointSeq.FitEllipse2();

}

// assure that the width is greater than the height

ellipse = NormalizeEllipse(ellipse);

// if the ellipse is too big for agrain, shrink it

ellipse = rightSize(ellipse, points.Where(p => isOnEllipse(p.Point, ellipse, 10, 10)).ToList());

// sometimes the ellipse given by FitEllipse2 is totally off

if (boundingRect.Center.DistanceTo(ellipse.Center) > Math.Max(boundingRect.Size.Width, boundingRect.Size.Height) * 2)

{

// ignore this bad fit

continue;

}

// estimate the error

double error = calculateEllipseError(points, ellipse);

if (error < bestError)

{

// found a better ellipse!

bestError = error;

bestEllipse = ellipse;

}

}

return bestEllipse;

}

}

/// <summary> The proper thing to do would be to use the actual distance of each point to the elipse.

/// However that formula is complicated, so ... </summary>

private double calculateEllipseError(List<EllipsePoint> points, CvBox2D ellipse)

{

const double toleranceInner = 5;

const double toleranceOuter = 10;

int numWrongPoints = points.Count(p => !isOnEllipse(p.Point, ellipse, toleranceInner, toleranceOuter));

double ratioWrongPoints = numWrongPoints * 1.0 / points.Count;

int numTotallyWrongPoints = points.Count(p => !isOnEllipse(p.Point, ellipse, 10, 20));

double ratioTotallyWrongPoints = numTotallyWrongPoints * 1.0 / points.Count;

// this pseudo-distance is biased towards deviations on the major axis

double pseudoDistance = Math.Sqrt(points.Sum(p => Math.Abs(1 - ellipseMetric(p.Point, ellipse))) / points.Count);

// primarily take the number of points far from the elipse border as an error metric.

// use pseudo-distance to break ties between elipses with the same number of wrong points

return ratioWrongPoints * 1000 + ratioTotallyWrongPoints+ pseudoDistance / 1000;

}

/// <summary> shrink an ellipse if it is larger than the maximum grain dimensions </summary>

private static CvBox2D rightSize(CvBox2D ellipse, List<EllipsePoint> points)

{

if (ellipse.Size.Width < MAX_WIDTH && ellipse.Size.Height < MAX_HEIGHT) return ellipse;

// elipse is bigger than the maximum grain size

// resize it so it fits, while keeping one edge of the bounding rectangle constant

double desiredWidth = Math.Max(10, Math.Min(MAX_WIDTH, ellipse.Size.Width));

double desiredHeight = Math.Max(10, Math.Min(MAX_HEIGHT, ellipse.Size.Height));

CvPoint2D32f average = points.Average();

// get the corners of the surrounding bounding box

var corners = ellipse.BoxPoints().ToList();

// find the corner that is closest to the center of mass of the points

int i0 = ellipse.BoxPoints().Select((point, index) => new { point, index }).OrderBy(p => p.point.DistanceTo(average)).First().index;

CvPoint p0 = corners[i0];

// find the two corners that are neighbouring this one

CvPoint p1 = corners[(i0 + 1) % 4];

CvPoint p2 = corners[(i0 + 3) % 4];

// p1 is the next corner along the major axis (widht), p2 is the next corner along the minor axis (height)

if (p0.DistanceTo(p1) < p0.DistanceTo(p2))

{

CvPoint swap = p1;

p1 = p2;

p2 = swap;

}

// calculate the three other corners with the desired widht and height

CvPoint2D32f edge1 = (p1 - p0);

CvPoint2D32f edge2 = p2 - p0;

double edge1Length = Math.Max(0.0001, p0.DistanceTo(p1));

double edge2Length = Math.Max(0.0001, p0.DistanceTo(p2));

CvPoint2D32f newCenter = (CvPoint2D32f)p0 + edge1 * (desiredWidth / edge1Length) + edge2 * (desiredHeight / edge2Length);

CvBox2D smallEllipse = new CvBox2D(newCenter, new CvSize2D32f((float)desiredWidth, (float)desiredHeight), ellipse.Angle);

return smallEllipse;

}

/// <summary> assure that the width of the elipse is the major axis, and the height is the minor axis.

/// Swap widht/height and rotate by 90° otherwise </summary>

private static CvBox2D NormalizeEllipse(CvBox2D ellipse)

{

if (ellipse.Size.Width < ellipse.Size.Height)

{

ellipse = new CvBox2D(ellipse.Center, new CvSize2D32f(ellipse.Size.Height, ellipse.Size.Width), (ellipse.Angle + 90 + 360) % 360);

}

return ellipse;

}

/// <summary> greater than 1 for points outside ellipse, smaller than 1 for points inside ellipse </summary>

private static double ellipseMetric(CvPoint p, CvBox2D ellipse)

{

double theta = ellipse.Angle * Math.PI / 180;

double u = Math.Cos(theta) * (p.X - ellipse.Center.X) + Math.Sin(theta) * (p.Y - ellipse.Center.Y);

double v = -Math.Sin(theta) * (p.X - ellipse.Center.X) + Math.Cos(theta) * (p.Y - ellipse.Center.Y);

return u * u / (ellipse.Size.Width * ellipse.Size.Width / 4) + v * v / (ellipse.Size.Height * ellipse.Size.Height / 4);

}

/// <summary> Is the point on the ellipseBorder, within a certain tolerance </summary>

private static bool isOnEllipse(CvPoint p, CvBox2D ellipse, double toleranceInner, double toleranceOuter)

{

double theta = ellipse.Angle * Math.PI / 180;

double u = Math.Cos(theta) * (p.X - ellipse.Center.X) + Math.Sin(theta) * (p.Y - ellipse.Center.Y);

double v = -Math.Sin(theta) * (p.X - ellipse.Center.X) + Math.Cos(theta) * (p.Y - ellipse.Center.Y);

double innerEllipseMajor = (ellipse.Size.Width - toleranceInner) / 2;

double innerEllipseMinor = (ellipse.Size.Height - toleranceInner) / 2;

double outerEllipseMajor = (ellipse.Size.Width + toleranceOuter) / 2;

double outerEllipseMinor = (ellipse.Size.Height + toleranceOuter) / 2;

double inside = u * u / (innerEllipseMajor * innerEllipseMajor) + v * v / (innerEllipseMinor * innerEllipseMinor);

double outside = u * u / (outerEllipseMajor * outerEllipseMajor) + v * v / (outerEllipseMinor * outerEllipseMinor);

return inside >= 1 && outside <= 1;

}

/// <summary> count the number of foreground pixels for this grain </summary>

public int CountPixel(CvMat img)

{

// todo: this is an incredibly inefficient way to count, allocating a new image with the size of the input each time

using (CvMat mask = new CvMat(img.Rows, img.Cols, MatrixType.U8C1))

{

mask.SetZero();

mask.FillPoly(new CvPoint[][] { this.EdgePoints.Select(p => p.Point).ToArray() }, CvColor.White);

mask.And(img, mask);

this.NumPixel = mask.CountNonZero();

}

return this.NumPixel;

}

/// <summary> draw the recognized shape of the grain </summary>

public void Draw(CvMat img, CvColor color)

{

img.FillPoly(new CvPoint[][] { this.EdgePoints.Select(p => p.Point).ToArray() }, color);

}

/// <summary> draw the contours of the grain </summary>

public void DrawContour(CvMat img, CvColor color)

{

img.DrawPolyLine(new CvPoint[][] { this.EdgePoints.Select(p => p.Point).ToArray() }, true, color);

}

/// <summary> draw the best-fit ellipse of the grain </summary>

public void DrawEllipse(CvMat img, CvColor color)

{

img.DrawEllipse(this.Position, new CvSize2D32f(this.MajorRadius, this.MinorRadius), this.Angle, 0, 360, color, 1);

}

/// <summary> print the grain index and the number of pixels divided by the average grain size</summary>

public void DrawText(double averageGrainSize, CvMat img, CvColor color)

{

img.PutText(String.Format("{0}|{1:0.0}", this.Index, this.NumPixel / averageGrainSize), this.Position + new CvPoint2D32f(-5, 10), font01, color);

}

}

I am a little embarrassed with this solution because a) I am not sure whether it is within the spirit of this challenge, and b) it is too big for a codegolf answer and lacks the elegance of the other solutions.

On the other hand, I am quite happy with the progress I achieved in labeling the grains, not merely counting them, so there is that.

-->

-->

1Surely someone has to try scikit-learn! – None – 2014-11-04T13:50:42.760

Great contest! :) Btw - could tell us something about end date of this challenge? – cyriel – 2014-11-05T03:58:34.817

@cyriel Probably sometime next week when there are no more additional submissions. – Calvin's Hobbies – 2014-11-05T04:33:31.033

I think this question deserves a bounty first! The best score is down to 9 now. – None – 2014-11-05T09:45:30.627

1@Lembik Down to 7 :) – Dr. belisarius – 2014-11-05T15:09:49.830

6One day, a rice scientist is going to come along and be head over heels happy that this question exists. – Nit – 2014-11-05T17:19:26.647

2

@Nit Just tell them http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3510117/ :)

– Dr. belisarius – 2014-11-05T17:27:43.957