Fortran

Okay, I'm using an obscure image format called FITS which is used for astronomy. This means there is a Fortran library for reading and writing such images. Also, ImageMagick and Gimp can both read/write FITS images.

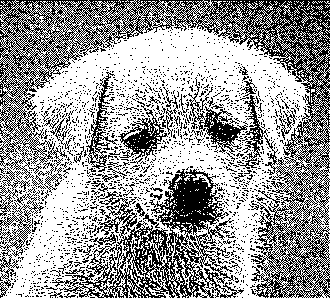

The algorithm I use is based on "Sierra Lite" dithering, but with two improvements:

a) I reduce the propagated error by a factor 4/5.

b) I introduce a random variation in the diffusion matrix while keeping its sum constant.

Together these almost completely elminiate the patterns seen in OPs example.

Assuming you have the CFITSIO library installed, compile with

gfortran -lcfitsio dither.f90

The file names are hard-coded (couldn't be bothered to fix this).

Code:

program dither

integer :: status,unit,readwrite,blocksize,naxes(2),nfound

integer :: group,npixels,bitpix,naxis,i,j,fpixel,un

real :: nullval,diff_mat(3,2),perr

real, allocatable :: image(:,:), error(:,:)

integer, allocatable :: seed(:)

logical :: anynull,simple,extend

character(len=80) :: filename

call random_seed(size=Nrand)

allocate(seed(Nrand))

open(newunit=un,file="/dev/urandom",access="stream",&

form="unformatted",action="read",status="old")

read(un) seed

close(un)

call random_seed(put=seed)

deallocate(seed)

status=0

call ftgiou(unit,status)

filename='PUPPY.FITS'

readwrite=0

call ftopen(unit,filename,readwrite,blocksize,status)

call ftgknj(unit,'NAXIS',1,2,naxes,nfound,status)

call ftgidt(unit,bitpix,status)

npixels=naxes(1)*naxes(2)

group=1

nullval=-999

allocate(image(naxes(1),naxes(2)))

allocate(error(naxes(1)+1,naxes(2)+1))

call ftgpve(unit,group,1,npixels,nullval,image,anynull,status)

call ftclos(unit, status)

call ftfiou(unit, status)

diff_mat=0.0

diff_mat(3,1) = 2.0

diff_mat(1,2) = 1.0

diff_mat(2,2) = 1.0

diff_mat=diff_mat/5.0

error=0.0

perr=0

do j=1,naxes(2)

do i=1,naxes(1)

p=max(min(image(i,j)+error(i,j),255.0),0.0)

if (p < 127.0) then

perr=p

image(i,j)=0.0

else

perr=p-255.0

image(i,j)=255.0

endif

call random_number(r)

r=0.6*(r-0.5)

error(i+1,j)= error(i+1,j) +perr*(diff_mat(3,1)+r)

error(i-1,j+1)=error(i-1,j+1)+perr*diff_mat(1,2)

error(i ,j+1)=error(i ,j+1) +perr*(diff_mat(2,2)-r)

end do

end do

call ftgiou(unit,status)

blocksize=1

filename='PUPPY-OUT.FITS'

call ftinit(unit,filename,blocksize,status)

simple=.true.

naxis=2

extend=.true.

call ftphpr(unit,simple,bitpix,naxis,naxes,0,1,extend,status)

group=1

fpixel=1

call ftppre(unit,group,fpixel,npixels,image,status)

call ftclos(unit, status)

call ftfiou(unit, status)

deallocate(image)

deallocate(error)

end program dither

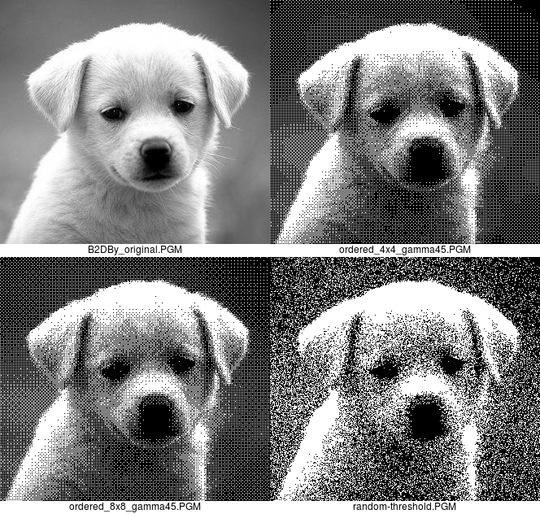

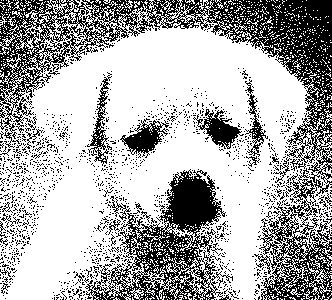

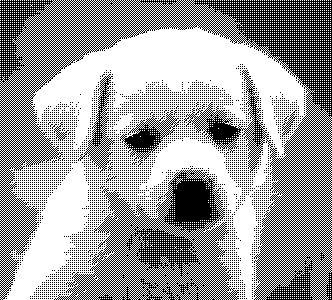

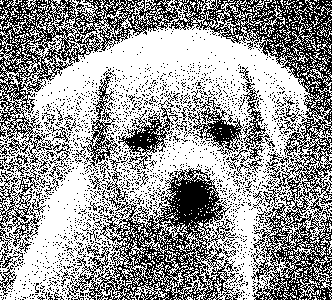

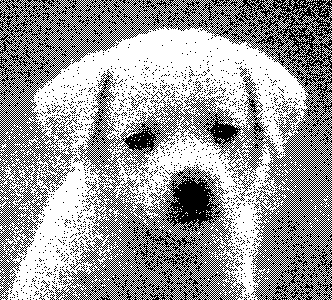

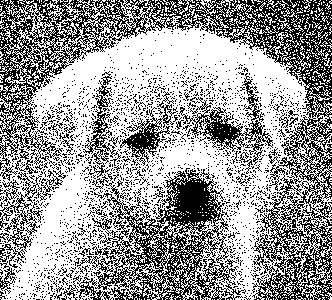

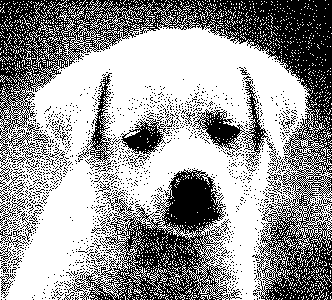

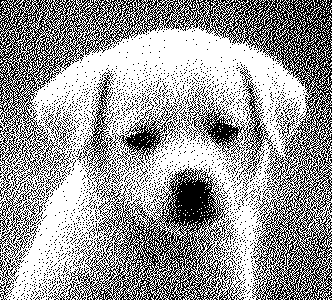

Example output for the puppy image in OPs post:

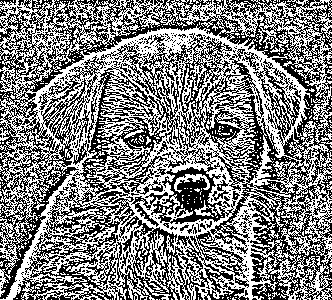

OPs example output:

@A.L I was hoping to see an answer to this. I've done some searching and found that it was a popular "wallpaper" distributed since about 2007 by "Crazy-Frankenstein.com", and I found a copy in a blog archive of June 2005 http://valentinedayisu.blogspot.com/2005/06/canine-wallpaper.html, without the craxy-frankenstein watermark.

– Glenn Randers-Pehrson – 2017-01-03T01:55:07.230Hm. Without any restriction on the code what prevents people from just implementing some established algorithm that is known to give "the best" results? – Martin Ender – 2014-05-03T21:24:43.617

@m.buettner I thought about this but I couldn't come up with a good solution. I don't want to do byte count, because that would give some languages a large advantage. Maybe speed and memory usage. – qwr – 2014-05-03T21:29:14.423

4+1 for an interesting challenge, but I think this would be much better as a [code-golf] (with a spec) or some other completely objective criterion. – Doorknob – 2014-05-03T21:30:31.353

2The problem with code-size, speed and memory usage is that you'd need an objective threshold for how recognisable the result must be for the answer to be valid, which is also quite impossible. Popularity contest does make sense, but without any restriction on the code there is no incentive for people to think out of the box. I'd rather upvote a clever answer than one gives the best result because it just implemented an existing algorithm. But you are currently incentivising the latter. – Martin Ender – 2014-05-03T21:31:05.377

@m.buettner I've edited the criteria so a new algorithm has to be made and creativity is taken into account. This doesn't stop people from slightly modifying an existing algorithm, but I hope it encourages creativity (every realistic way to dither has already been developed) – qwr – 2014-05-03T21:37:38.547

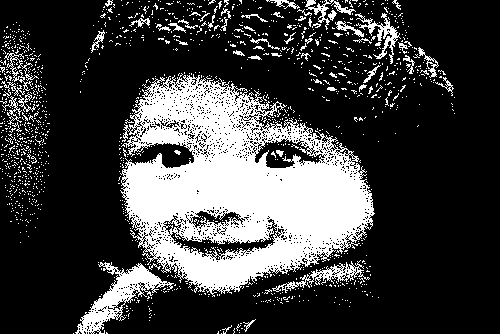

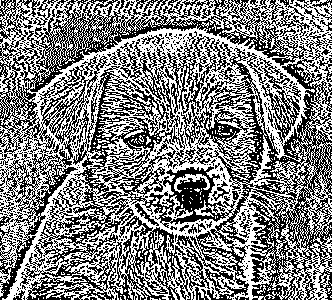

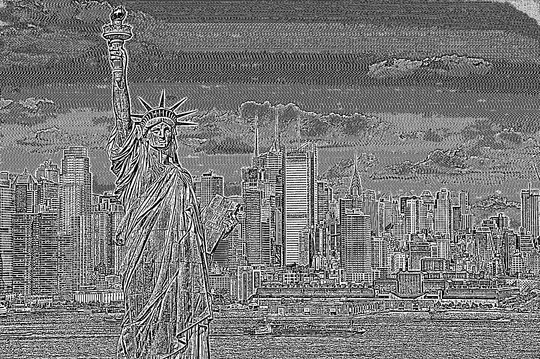

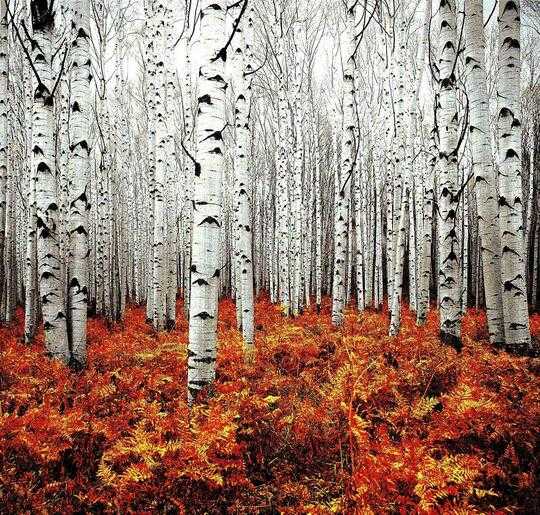

As a reference, a simple mean threshold of this image is here. Technically, that counts as dithering, according to Wikipedia.

– Geobits – 2014-05-04T00:05:05.3333The line between an algorithm and its technique is too thin to determine which side something falls. – Peter Taylor – 2014-05-04T05:55:42.207

2I think it would be a lot easier to compare the results if they all showed results from the same image. – joeytwiddle – 2014-05-04T17:55:41.587

1@Doorknob I disagree. Image quality is, by definition, subjective. If you put a code-golf tag on this, you will end up with a load of answers giving 50% black/white threshold. It's a rare example of correct application of the popularity-contest tag (i.e, when there is no way of judging objectively.) – Level River St – 2014-05-04T19:31:02.070

@steveverrill By "with a spec" I mean "with an exact specification of what the output should be, i.e. every solution produces the same output." – Doorknob – 2014-05-04T19:31:53.950

1

@Doorknob defining the output would be both difficult and limiting. Much of what makes image processing interesting is that you try an algorithm and you have no idea if it's going to be any good until you see the results. Remember this question? Very broad, 182 upvotes, no downvotes. If every question had a tight spec we would never have seen this. http://codegolf.stackexchange.com/q/22144/15599

– Level River St – 2014-05-04T19:43:40.6273Can you add the source of the image? (I don't think someone will be angry to see its his/her image here, but it's fair to cite the source) – A.L – 2014-05-07T01:57:49.163