Pure Evil: Eval

a=lambda x,y:(y<0)*x or eval("a("*9**9**9+"x**.1"+",y-1)"*9**9**9)

print a(input(),9**9**9**9**9)//1

The statement inside the eval creates a string of length 7*1010101010108.57 which consists of nothing but more calls to the lambda function each of which will construct a string of similar length, on and on until eventually y becomes 0. Ostensibly this has the same complexity as the Eschew method below, but rather than relying on if-and-or control logic, it just smashes giant strings together (and the net result is getting more stacks...probably?).

The largest y value I can supply and compute without Python throwing an error is 2 which is already sufficient to reduce an input of max-float into returning 1.

A string of length 7,625,597,484,987 is just too big: OverflowError: cannot fit 'long' into an index-sized integer.

I should stop.

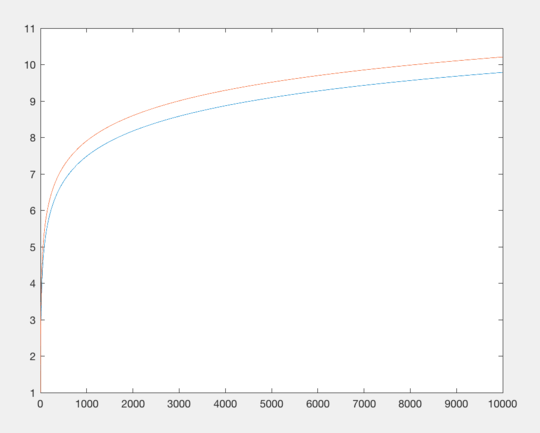

Eschew Math.log: Going to the (10th-)root (of the problem), Score: function effectively indistinguishable from y=1.

Importing the math library is restricting the byte count. Lets do away with that and replace the log(x) function with something roughly equivalent: x**.1 and which costs approximately the same number of characters, but doesn't require the import. Both functions have a sublinear output with respect to input, but x0.1 grows even more slowly. However we don't care a whole lot, we only care that it has the same base growth pattern with respect to large numbers while consuming a comparable number of characters (eg. x**.9 is the same number of characters, but grows more quickly, so there is some value that would exhibit the exact same growth).

Now, what to do with 16 characters. How about...extending our lambda function to have Ackermann Sequence properties? This answer for large numbers inspired this solution.

a=lambda x,y,z:(z<0)*x or y and a(x**.1,z**z,z-1)or a(x**.1,y-1,z)

print a(input(),9,9**9**9**99)//1

The z**z portion here prevents me from running this function with anywhere close to sane inputs for y and z, the largest values I can use are 9 and 3 for which I get back the value of 1.0, even for the largest float Python supports (note: while 1.0 is numerically greater than 6.77538853089e-05, increased recursion levels move the output of this function closer to 1, while remaining greater than 1, whereas the previous function moved values closer to 0 while remaining greater than 0, thus even moderate recursion on this function results in so many operations that the floating point number loses all significant bits).

Re-configuring the original lambda call to have recursion values of 0 and 2...

>>>1.7976931348623157e+308

1.0000000071

If the comparison is made to "offset from 0" instead of "offset from 1" this function returns 7.1e-9, which is definitely smaller than 6.7e-05.

Actual program's base recursion (z value) is 101010101.97 levels deep, as soon as y exhausts itself, it gets reset with 10101010101.97 (which is why an initial value of 9 is sufficient), so I don't even know how to correctly compute the total number of recursions that occur: I've reached the end of my mathematical knowledge. Similarly I don't know if moving one of the **n exponentiations from the initial input to the secondary z**z would improve the number of recursions or not (ditto reverse).

Lets go even slower with even more recursion

import math

a=lambda x,y:(y<0)*x or a(a(a(math.log(x+1),y-1),y-1),y-1)

print a(input(),9**9**9e9)//1

n//1 - saves 2 bytes over int(n)import math,math. saves 1 byte over from math import*a(...) saves 8 bytes total over m(m,...)(y>0)*x saves a byte over y>0and x9**9**99 increases byte count by 4 and increases recursion depth by approximately 2.8 * 10^x where x is the old depth (or a depth nearing a googolplex in size: 101094).9**9**9e9 increases the byte count by 5 and increases recursion depth by...an insane amount. Recursion depth is now 1010109.93, for reference, a googolplex is 1010102.- lambda declaration increases recursion by an extra step:

m(m(...)) to a(a(a(...))) costs 7 bytes

New output value (at 9 recursion depth):

>>>1.7976931348623157e+308

6.77538853089e-05

Recursion depth has exploded to the point at which this result is literally meaningless except in comparison to the earlier results using the same input values:

- The original called

log 25 times

- The first improvement calls it 81 times

- The actual program would call it 1e992 or about 10102.3 times

- This version calls it 729 times

- The actual program would call it (9999)3 or slightly less than 101095 times).

Lambda Inception, score: ???

I heard you like lambdas, so...

from math import*

a=lambda m,x,y:y<0and x or m(m,m(m,log(x+1),y-1),y-1)

print int(a(a,input(),1e99))

I can't even run this, I stack overflow even with a mere 99 layers of recursion.

The old method (below) returns (skipping the conversion to an integer):

>>>1.7976931348623157e+308

0.0909072713593

The new method returns, using only 9 layers of incursion (rather than the full googol of them):

>>>1.7976931348623157e+308

0.00196323936205

I think this works out to be of similar complexity to the Ackerman sequence, only small instead of big.

Also thanks to ETHproductions for a 3-byte savings in spaces I didn't realize could be removed.

Old answer:

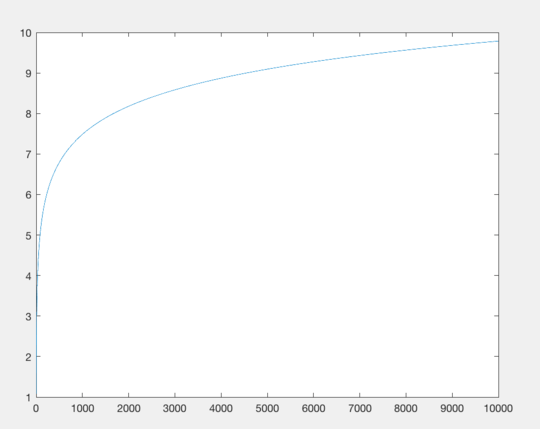

The integer truncation of the function log(i+1) iterated 20 25 times (Python) using lambda'd lambdas.

PyRulez's answer can be compressed by introducing a second lambda and stacking it:

from math import *

x=lambda i:log(i+1)

y=lambda i:x(x(x(x(x(i)))))

print int(y(y(y(y(y(input()))))))

99 100 characters used.

This produces an iteration of 20 25, over the original 12. Additionally it saves 2 characters by using int() instead of floor() which allowed for an additional x() stack. If the spaces after the lambda can be removed (I cannot check at the moment) then a 5th y() can be added. Possible!

If there's a way to skip the from math import by using a fully qualified name (eg. x=lambda i: math.log(i+1))), that would save even more characters and allow for another stack of x() but I don't know if Python supports such things (I suspect not). Done!

This is essentially the same trick used in XCKD's blog post on large numbers, however the overhead in declaring lambdas precludes a third stack:

from math import *

x=lambda i:log(i+1)

y=lambda i:x(x(x(i)))

z=lambda i:y(y(y(i)))

print int(z(z(z(input()))))

This is the smallest recursion possible with 3 lambdas that exceeds the computed stack height of 2 lambdas (reducing any lambda to two calls drops the stack height to 18, below that of the 2-lambda version), but unfortunately requires 110 characters.

3Related. – Martin Ender – 2017-06-08T18:47:34.610

3An effective strategy is to write a fast-growing function and take its inverse, i.e. find the smallest input to it that produces at least the required value. Perhaps this is a dupe? – xnor – 2017-06-08T18:59:52.913

A third of the "More specifically" paragraph was missing because Markdown thinks a

<followed by a letter is the start of an HTML tag. Preview your questions before you post them please :P – ETHproductions – 2017-06-08T20:11:54.3871What large cardinal axioms can we assume? – Peter Taylor – 2017-06-08T20:46:21.927

@Peter Taylor uhm, whichever you want I guess – PyRulez – 2017-06-08T20:47:28.517

1Is the time machine to test our answers provided? – Magic Octopus Urn – 2017-06-09T16:20:52.097

Your definitions of "growing" and "slowest growing" are exceedingly hard for me to understand, and that is made much worse by using both

nandNas different variables. It's gibberish when read aloud. – David Conrad – 2017-06-23T00:14:32.860@DavidConrad essentially, it's asymptotic – PyRulez – 2017-06-23T00:20:31.623

How is this any different than saying "For every K there is an N such that P(N) > K"? Why did you introduce two N's? Just to be confusing? Sorry, I guess I'm just not smart enough to understand this. But naming both terms "N", distinguished only by case, is definitely a bad, bad idea. – David Conrad – 2017-06-23T02:04:32.623

@DavidConrad I can change it if you like (I didn't try reading it out loud). – PyRulez – 2017-06-25T05:19:09.547

Can we ignore 0 as an input? – MilkyWay90 – 2019-04-26T02:48:17.267