23

1

And in particular the second law: the entropy of an isolated system increases over time.

For this challenge,

- An "isolated system" will be taken to be a program or function (abbreviated as "program" from now on);

- The passing of "time" will correspond to iterated executions of the program's output, considered as a new program;

- "Entropy" will be taken as Shannon's first-order entropy (to be defined below), which is a measure of how diverse the characters of the string are.

The challenge

Your program should produce a non-empty string that when executed as a program in the same language produces a string with more entropy than the previous one. Infinitely iterating this execute-the-output process must produce a strictly increasing sequence of entropy values.

The strings can contain any Unicode 9.0 characters. The sequence of strings must be deterministic (as opposed to random).

The entropy for a given string will be defined as follows. Identify its unique characters and their number of occurrences in the string. The frequency pi of the i-th unique character is the number of occurrences of that character divided by the length of the string. The entropy is then

where the sum is over all unique characters of the string. Technically, this corresponds to the entropy of a discrete random variable with distribution given by the frequencies observed in the string.

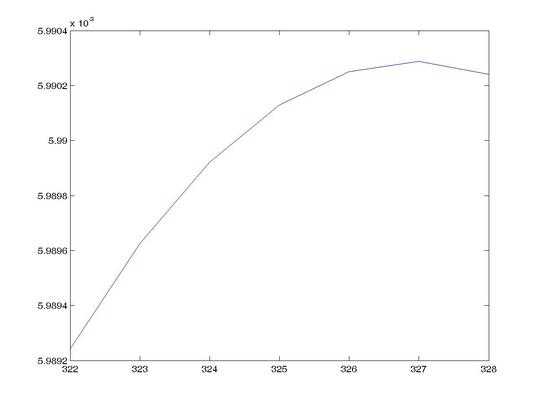

Let Hk denote the entropy of the string produced by the k-th program, and let H0 denote the entropy of the initial program's code. Also, let L0 denote the length of the initial program in characters. The sequence {Hk} is monotone as per the challenge requirements, and is bounded (because the number of existing characters is finite). Therefore it has a limit, H∞.

The score of a submission will be (H∞−H0)/L0:

- The numerator, H∞−H0, reflects to what extent your code "obeys" the law of increasing entropy over an infinite time span.

- The denonimator, L0, is the length of the initial code in characters (not in bytes).

The code with the highest score wins. Ties will be resolved in favour of the earliest submission/edit.

To compute the entropy of a string, you can use the JavaScript snippet (courtesy of @flawr and with corrections by @Dennis and @ETHproductions) at the end of this post.

If obtaining the limit H∞ is difficult in your specific case, you can use any lower bound, say H20, to compute the score (so you would use (H20−H0)/L0). But in any case, the infinite sequence of entropies must be strictly increasing.

Please include an explanation or short proof that the sequence of entropies is increasing, if that's not evident.

Example

In a fictional language, consider the code aabcab, which when run produces the string cdefgh, which when run produces cdefghi, which ...

The unique characters of the original code are a, b and c, with respective frequencies 3/6, 2/6 and 1/6. Its entropy is 1.4591. This is H0.

The string cdefgh has more entropy than aabcab. We can know this without computing it because for a given number of characters the entropy is maximized when all the frequencies are equal. Indeed, the entropy H1 is 2.5850.

The string cdefghi again has more entropy than the previous one. We can now without computing because adding a non-existing character always increases the entropy. Indeed, H2 is 2.8074.

If the next string were 42 the chain would be invalid, because H3 would be 1, smaller than 2.8074.

If, on the other hand, the sequence went on producing strings of increasing entropy with limit H∞ = 3, the score would be (3−1.4597)/6 = 0.2567.

Acknowledgments

Thanks to

@xnor for his help improving the challenge, and in particular for convincing me that infinite chains of increasing entropy obtained from iterated execution are indeed possible;

@flawr for several suggestions, including modifying the score function, and for writing the very useful snippet;

@Angs for pointing out an essential drawback in a previous definition of the score function;

@Dennis for a correction in the JavaScript snippet;

@ETHproductions for another correction in the snippet;

@PeterTaylor for a correction in the definition of entropy.

Snippet for calculating the entropy

function calculateEntropy() {

var input = document.getElementById("input").value; // fetch input as string

if (/[\uD800-\uDBFF]([^\uDC00-\uDFFF]|$)|([^\uD800-\uDBFF]|^)[\uDC00-\uDFFF]/.test(input)) {

document.getElementById("output").innerHTML = " invalid (unmatched surrogates)";

return;

}

var charArray = input.match(/[\uD800-\uDBFF][\uDC00-\uDFFF]|[^]/g);

var frequency = {};

for (var ind in charArray) {

frequency[charArray[ind]] = 0;

}

var total = 0;

for (var ind in charArray) {

frequency[charArray[ind]] ++;

total++;

}

var entropy = 0;

for (var key in frequency) {

var p = frequency[key] / total;

entropy -= p * Math.log2(p);

}

document.getElementById("output").innerHTML = " " + entropy;

};<button type="button" onClick="javascript:calculateEntropy();">calculate</button>Entropy: <span id="output">NaN</span>

<br/>

<textarea id="input">asdf asdf</textarea>

4“On this site we obey the laws of thermodynamics!” [citation-needed] – TuxCrafting – 2016-11-17T14:48:30.840

2

Here is the source :-)

– Luis Mendo – 2016-11-17T14:50:26.037Is there any constraint on the length of the output? Like having the same amount of characters than the input for instance. I find this unclear. – Osable – 2016-11-17T15:29:28.650

1I was hoping the question would be about "Hot" Network Questions. – mbomb007 – 2016-11-17T15:30:06.083

@Osable The outputs can have any length you want – Luis Mendo – 2016-11-17T15:30:29.717

1I was wondering... is it actually possible to infinitely strictly increase the entropy? If I take the output in its unsigned binary form, it's basically a sequence of integers in range [0,255]. If the entropy is optimal when all the characters are different (just an assumption), wouldn't it imply that the string with largest entropy is 256 bytes long? It's far from being infinite. Or my assumption is wrong. – Osable – 2016-11-17T16:38:29.263

2@Osable Attach a copy of that string to itself and the entropy will be the same. Then remove one char and it will be slightly smaller. Invert the process and you have increased the entropy. If you manage to never reach the maximum entropy, you can keep on increasing forever – Luis Mendo – 2016-11-17T16:43:29.090

Hmm it's that last sentence that I did not figure out first. Now that's clear. I thought we had to produce something with an 'infinite' entropy. – Osable – 2016-11-17T16:47:31.220

1@Osable Yes, quite a few people seem to be confused by that... :( But the challenge says "strictly increasing sequence" (not "strictly increasing sequence that tends to infinity"). And the example has limit entropy of 3 – Luis Mendo – 2016-11-17T16:49:32.770

@LuisMendo Yes because we went a little too fast. Anyway can the input value be balanced (I mean, all characters have the same frequency)? – Osable – 2016-11-17T17:00:40.130

@Osable There On this challenge there is no input really. If you mean the initial string (initial program), it can be whatever you want – Luis Mendo – 2016-11-17T17:11:33.763

@Osable there's a concrete example now, if that helps.

– trichoplax – 2016-11-17T23:14:21.237I assume that that "pseudorandom" is not, for the purposes of this challenge, "deterministic"? – Nissa – 2016-11-18T02:05:35.250

@Stephen The sequence of strings has to be the same for every chain of executions. Pseudorandom is acceptable if you can achieve that, for example using always the same seed – Luis Mendo – 2016-11-18T09:00:40.437