C, with Lab color space and improved dithering

Did I say I was done? I lied. I think the algorithm in my other solution is the best out there, but Perl just isn't fast enough for number crunching tasks, so I reimplemented my work in C. It now runs all of the images in this post, at a higher quality than the original at about 3 minutes per image, and slightly lower quality (0.5% level) runs in 20-30 seconds per image. Basically all of the work is done with ImageMagick, and the dithering is done using ImageMagick's cubic spline interpolation, which gives a better / less patterned result.

Code

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <math.h>

#include <unistd.h>

#include <wand/MagickWand.h>

#define ThrowWandException(wand) \

{ \

char \

*description; \

\

ExceptionType \

severity; \

\

description=MagickGetException(wand,&severity); \

(void) fprintf(stderr,"%s %s %lu %s\n",GetMagickModule(),description); \

description=(char *) MagickRelinquishMemory(description); \

abort(); \

exit(-1); \

}

int width, height; /* Target image size */

MagickWand *source_wand, *target_wand, *img_wand, *target_lab_wand, *img_lab_wand;

PixelPacket *source_pixels, *target_pixels, *img_pixels, *target_lab_pixels, *img_lab_pixels;

Image *img, *img_lab, *target, *target_lab;

CacheView *img_lab_view, *target_lab_view;

ExceptionInfo *e;

MagickWand *load_image(const char *filename) {

MagickWand *img = NewMagickWand();

if (!MagickReadImage(img, filename)) {

ThrowWandException(img);

}

return img;

}

PixelPacket *get_pixels(MagickWand *wand) {

PixelPacket *ret = GetAuthenticPixels(

GetImageFromMagickWand(wand), 0, 0,

MagickGetImageWidth(wand), MagickGetImageHeight(wand), e);

CatchException(e);

return ret;

}

void sync_pixels(MagickWand *wand) {

SyncAuthenticPixels(GetImageFromMagickWand(wand), e);

CatchException(e);

}

MagickWand *transfer_pixels() {

if (MagickGetImageWidth(source_wand) * MagickGetImageHeight(source_wand)

!= MagickGetImageWidth(target_wand) * MagickGetImageHeight(target_wand)) {

perror("size mismtch");

}

MagickWand *img_wand = CloneMagickWand(target_wand);

img_pixels = get_pixels(img_wand);

memcpy(img_pixels, source_pixels,

MagickGetImageWidth(img_wand) * MagickGetImageHeight(img_wand) * sizeof(PixelPacket));

sync_pixels(img_wand);

return img_wand;

}

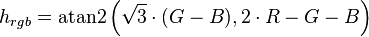

MagickWand *image_to_lab(MagickWand *img) {

MagickWand *lab = CloneMagickWand(img);

TransformImageColorspace(GetImageFromMagickWand(lab), LabColorspace);

return lab;

}

int lab_distance(PixelPacket *a, PixelPacket *b) {

int l_diff = (GetPixelL(a) - GetPixelL(b)) / 256,

a_diff = (GetPixela(a) - GetPixela(b)) / 256,

b_diff = (GetPixelb(a) - GetPixelb(b)) / 256;

return (l_diff * l_diff + a_diff * a_diff + b_diff * b_diff);

}

int should_swap(int x1, int x2, int y1, int y2) {

int dist = lab_distance(&img_lab_pixels[width * y1 + x1], &target_lab_pixels[width * y1 + x1])

+ lab_distance(&img_lab_pixels[width * y2 + x2], &target_lab_pixels[width * y2 + x2]);

int swapped_dist = lab_distance(&img_lab_pixels[width * y2 + x2], &target_lab_pixels[width * y1 + x1])

+ lab_distance(&img_lab_pixels[width * y1 + x1], &target_lab_pixels[width * y2 + x2]);

return swapped_dist < dist;

}

void pixel_multiply_add(MagickPixelPacket *dest, PixelPacket *src, double mult) {

dest->red += (double)GetPixelRed(src) * mult;

dest->green += ((double)GetPixelGreen(src) - 32768) * mult;

dest->blue += ((double)GetPixelBlue(src) - 32768) * mult;

}

#define min(x,y) (((x) < (y)) ? (x) : (y))

#define max(x,y) (((x) > (y)) ? (x) : (y))

double mpp_distance(MagickPixelPacket *a, MagickPixelPacket *b) {

double l_diff = QuantumScale * (a->red - b->red),

a_diff = QuantumScale * (a->green - b->green),

b_diff = QuantumScale * (a->blue - b->blue);

return (l_diff * l_diff + a_diff * a_diff + b_diff * b_diff);

}

void do_swap(PixelPacket *pix, int x1, int x2, int y1, int y2) {

PixelPacket tmp = pix[width * y1 + x1];

pix[width * y1 + x1] = pix[width * y2 + x2];

pix[width * y2 + x2] = tmp;

}

int should_swap_dither(double detail, int x1, int x2, int y1, int y2) {

// const InterpolatePixelMethod method = Average9InterpolatePixel;

const InterpolatePixelMethod method = SplineInterpolatePixel;

MagickPixelPacket img1, img2, img1s, img2s, target1, target2;

GetMagickPixelPacket(img, &img1);

GetMagickPixelPacket(img, &img2);

GetMagickPixelPacket(img, &img1s);

GetMagickPixelPacket(img, &img2s);

GetMagickPixelPacket(target, &target1);

GetMagickPixelPacket(target, &target2);

InterpolateMagickPixelPacket(img, img_lab_view, method, x1, y1, &img1, e);

InterpolateMagickPixelPacket(img, img_lab_view, method, x2, y2, &img2, e);

InterpolateMagickPixelPacket(target, target_lab_view, method, x1, y1, &target1, e);

InterpolateMagickPixelPacket(target, target_lab_view, method, x2, y2, &target2, e);

do_swap(img_lab_pixels, x1, x2, y1, y2);

// sync_pixels(img_wand);

InterpolateMagickPixelPacket(img, img_lab_view, method, x1, y1, &img1s, e);

InterpolateMagickPixelPacket(img, img_lab_view, method, x2, y2, &img2s, e);

do_swap(img_lab_pixels, x1, x2, y1, y2);

// sync_pixels(img_wand);

pixel_multiply_add(&img1, &img_lab_pixels[width * y1 + x1], detail);

pixel_multiply_add(&img2, &img_lab_pixels[width * y2 + x2], detail);

pixel_multiply_add(&img1s, &img_lab_pixels[width * y2 + x2], detail);

pixel_multiply_add(&img2s, &img_lab_pixels[width * y1 + x1], detail);

pixel_multiply_add(&target1, &target_lab_pixels[width * y1 + x1], detail);

pixel_multiply_add(&target2, &target_lab_pixels[width * y2 + x2], detail);

double dist = mpp_distance(&img1, &target1)

+ mpp_distance(&img2, &target2);

double swapped_dist = mpp_distance(&img1s, &target1)

+ mpp_distance(&img2s, &target2);

return swapped_dist + 1.0e-4 < dist;

}

int main(int argc, char *argv[]) {

if (argc != 7) {

fprintf(stderr, "Usage: %s source.png target.png dest nodither_pct dither_pct detail\n", argv[0]);

return 1;

}

char *source_filename = argv[1];

char *target_filename = argv[2];

char *dest = argv[3];

double nodither_pct = atof(argv[4]);

double dither_pct = atof(argv[5]);

double detail = atof(argv[6]) - 1;

const int SWAPS_PER_LOOP = 1000000;

int nodither_limit = ceil(SWAPS_PER_LOOP * nodither_pct / 100);

int dither_limit = ceil(SWAPS_PER_LOOP * dither_pct / 100);

int dither = 0, frame = 0;

char outfile[256], cmdline[1024];

sprintf(outfile, "out/%s.png", dest);

MagickWandGenesis();

e = AcquireExceptionInfo();

source_wand = load_image(source_filename);

source_pixels = get_pixels(source_wand);

target_wand = load_image(target_filename);

target_pixels = get_pixels(target_wand);

img_wand = transfer_pixels();

img_pixels = get_pixels(img_wand);

target_lab_wand = image_to_lab(target_wand);

target_lab_pixels = get_pixels(target_lab_wand);

img_lab_wand = image_to_lab(img_wand);

img_lab_pixels = get_pixels(img_lab_wand);

img = GetImageFromMagickWand(img_lab_wand);

target = GetImageFromMagickWand(target_lab_wand);

img_lab_view = AcquireAuthenticCacheView(img, e);

target_lab_view = AcquireAuthenticCacheView(target,e);

CatchException(e);

width = MagickGetImageWidth(img_wand);

height = MagickGetImageHeight(img_wand);

while (1) {

int swaps_made = 0;

for (int n = 0 ; n < SWAPS_PER_LOOP ; n++) {

int x1 = rand() % width,

x2 = rand() % width,

y1 = rand() % height,

y2 = rand() % height;

int swap = dither ?

should_swap_dither(detail, x1, x2, y1, y2)

: should_swap(x1, x2, y1, y2);

if (swap) {

do_swap(img_pixels, x1, x2, y1, y2);

do_swap(img_lab_pixels, x1, x2, y1, y2);

swaps_made ++;

}

}

sync_pixels(img_wand);

if (!MagickWriteImages(img_wand, outfile, MagickTrue)) {

ThrowWandException(img_wand);

}

img_pixels = get_pixels(img_wand);

sprintf(cmdline, "cp out/%s.png anim/%s/%05i.png", dest, dest, frame++);

system(cmdline);

if (!dither && swaps_made < nodither_limit) {

sprintf(cmdline, "cp out/%s.png out/%s-nodither.png", dest, dest);

system(cmdline);

dither = 1;

} else if (dither && swaps_made < dither_limit)

break;

}

return 0;

}

Compile with

gcc -std=gnu99 -O3 -march=native -ffast-math \

-o transfer `pkg-config --cflags MagickWand` \

transfer.c `pkg-config --libs MagickWand` -lm

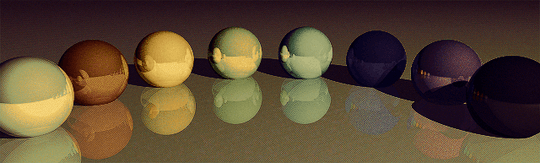

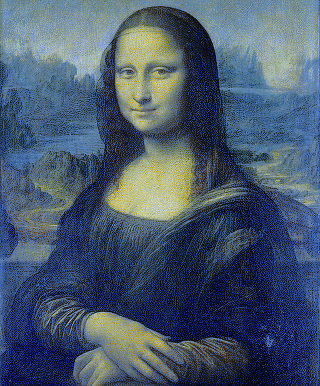

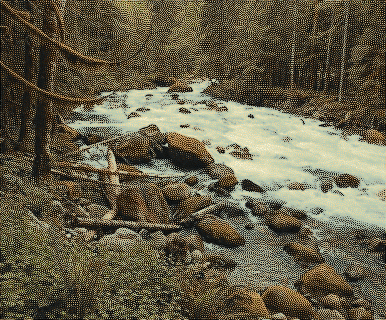

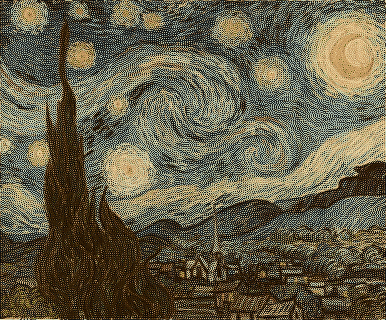

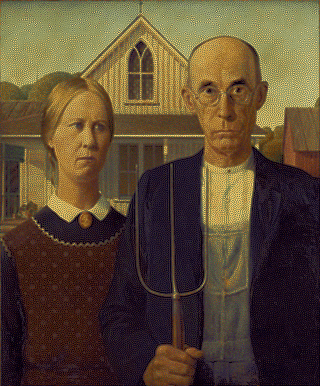

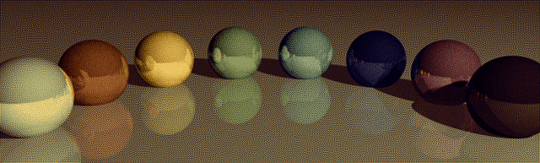

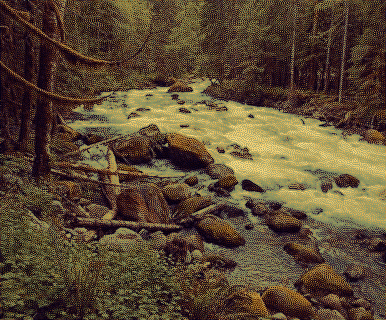

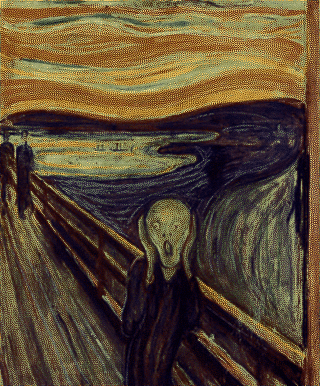

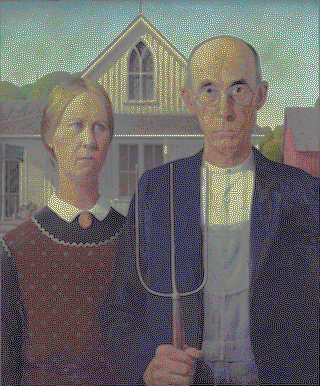

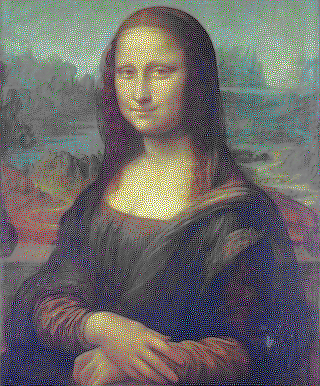

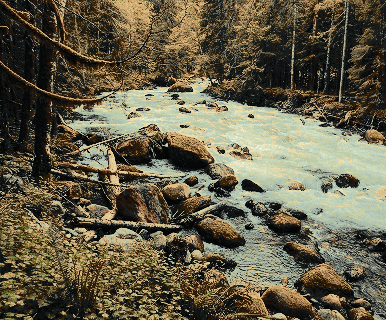

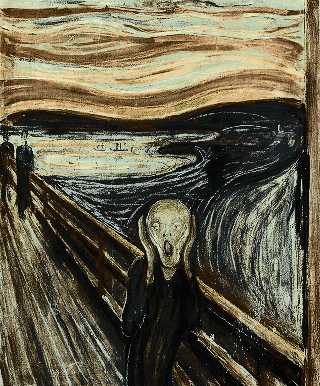

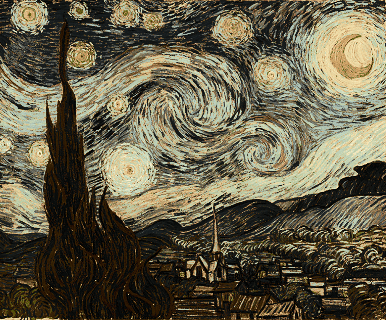

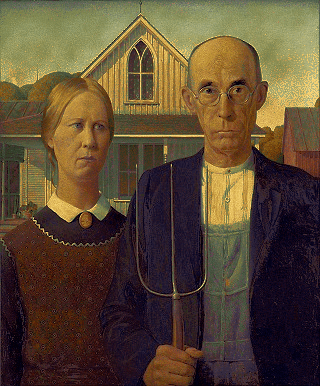

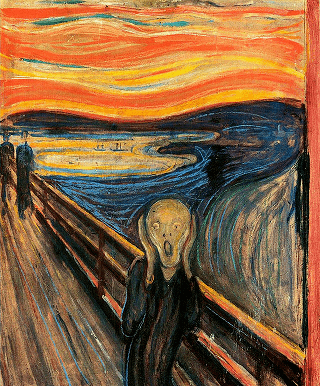

Results

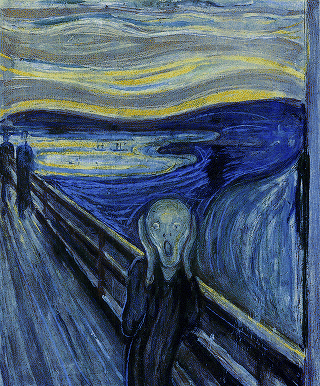

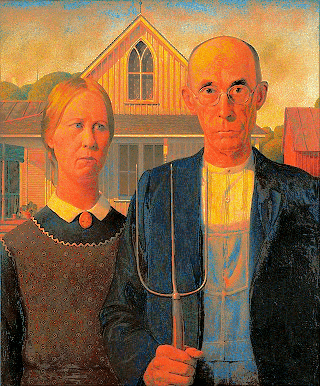

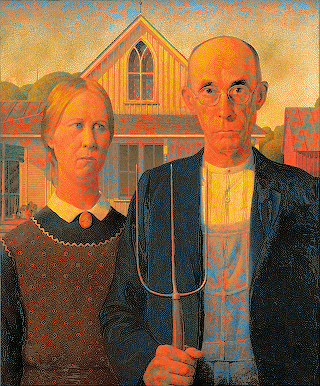

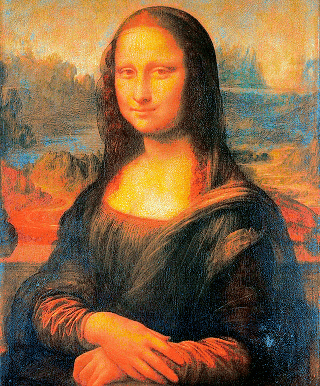

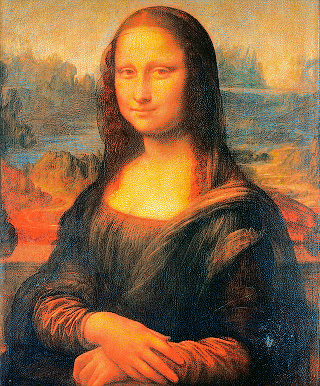

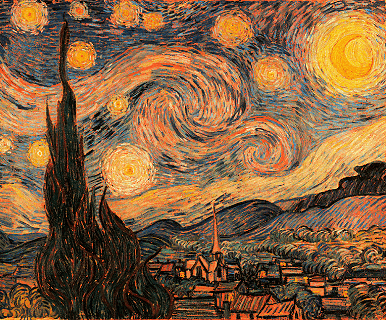

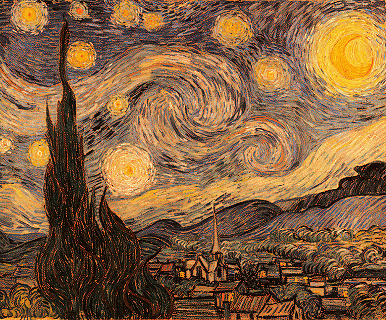

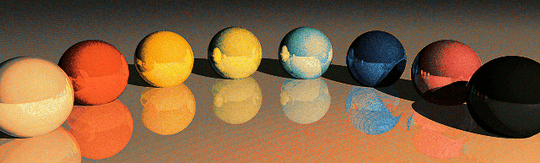

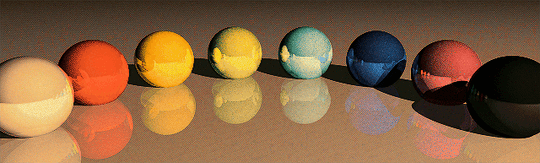

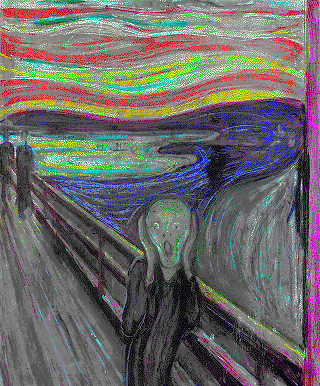

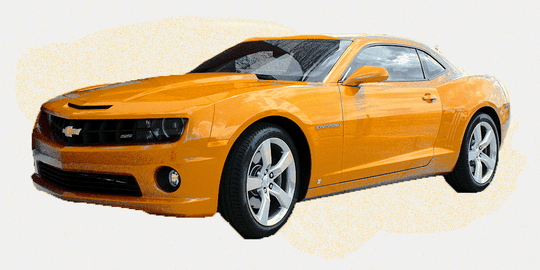

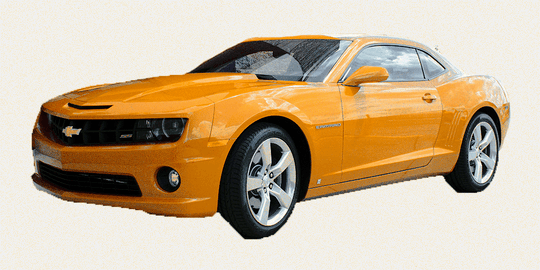

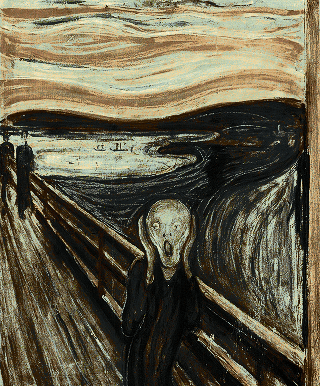

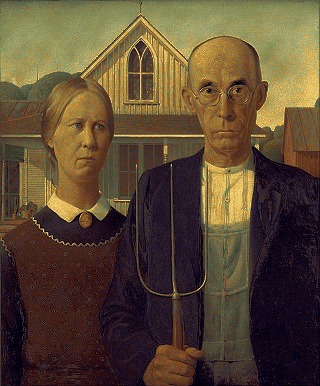

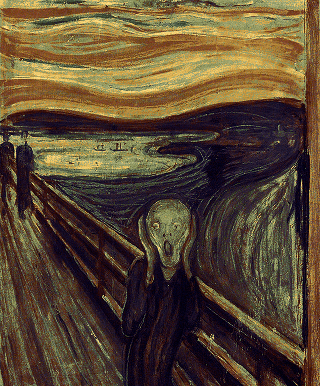

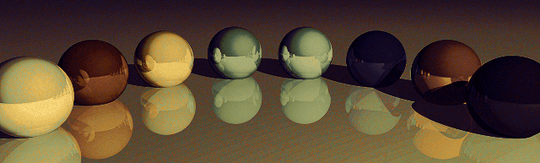

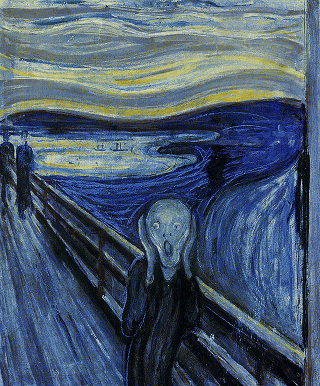

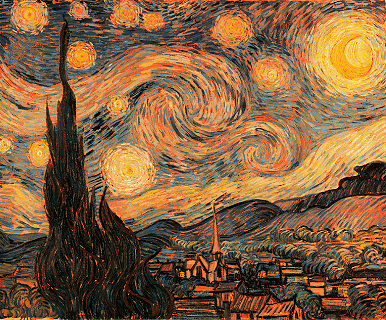

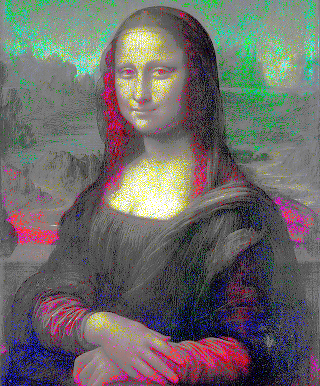

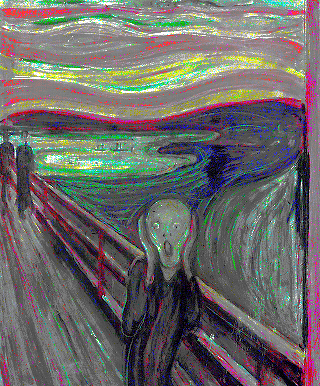

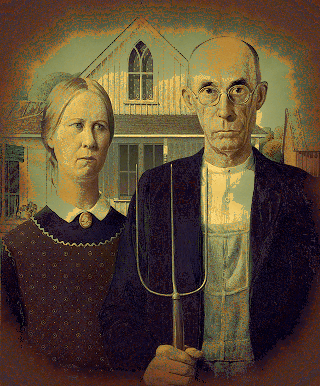

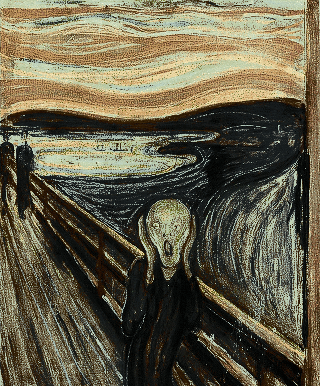

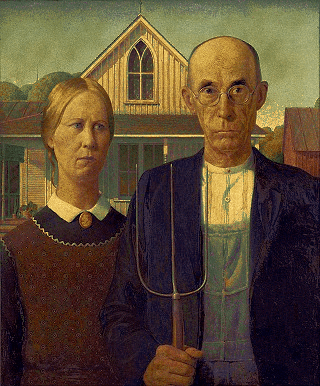

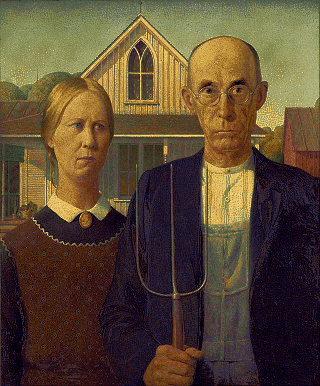

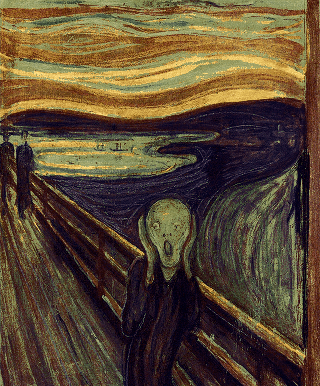

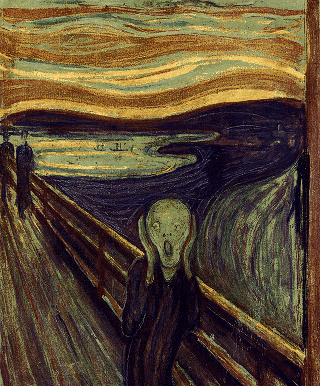

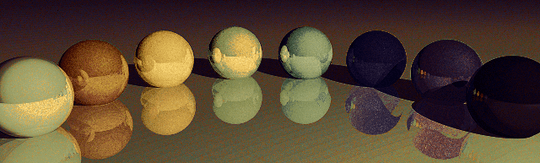

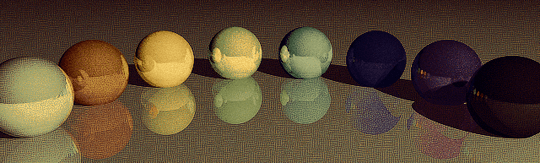

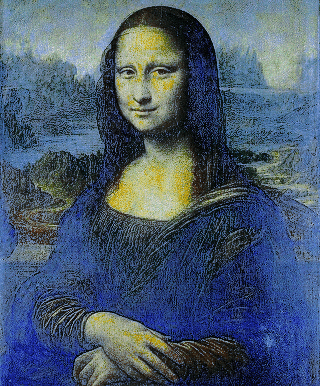

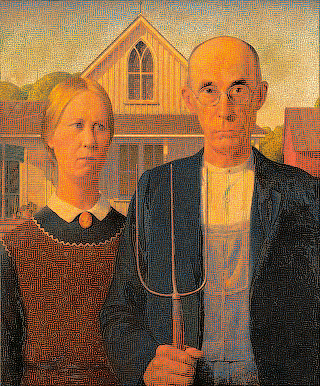

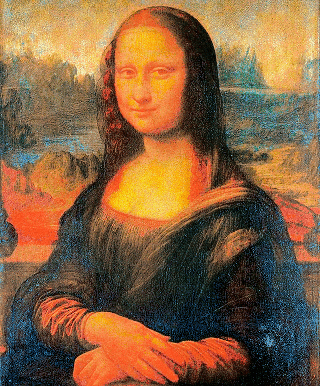

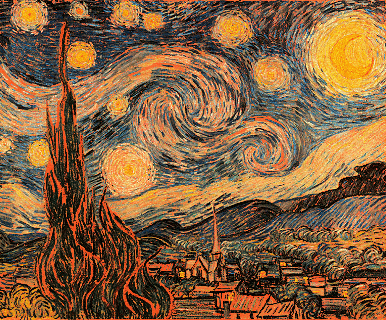

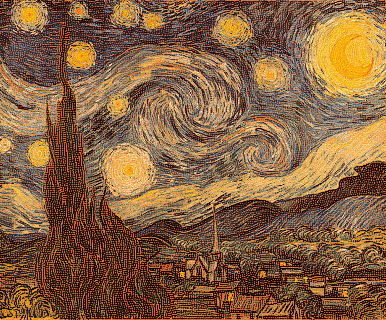

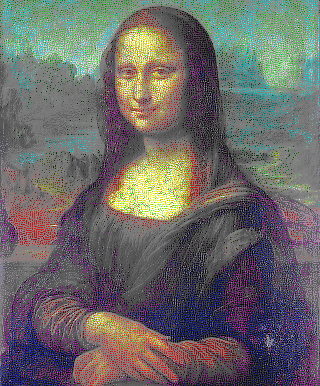

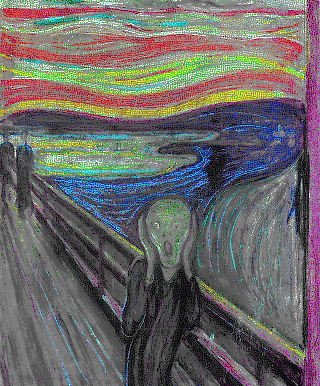

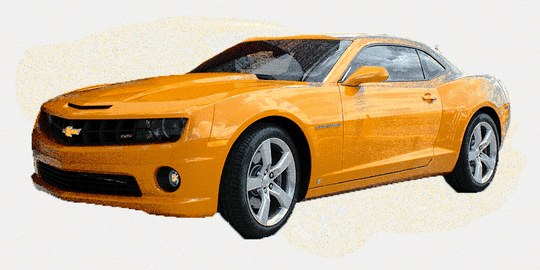

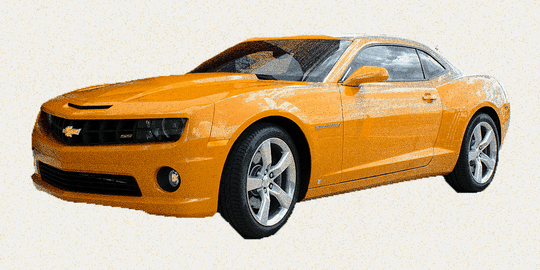

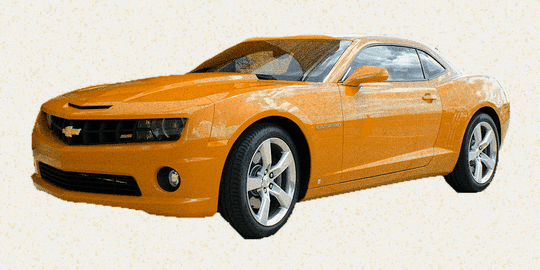

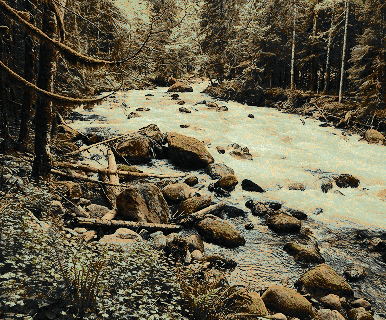

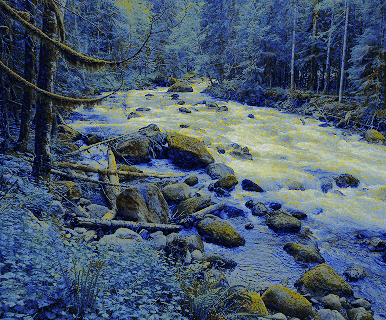

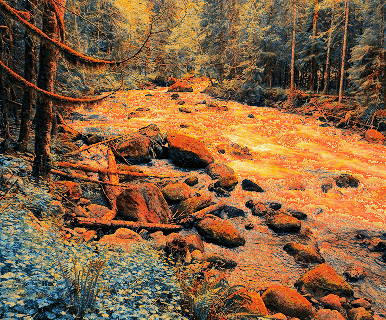

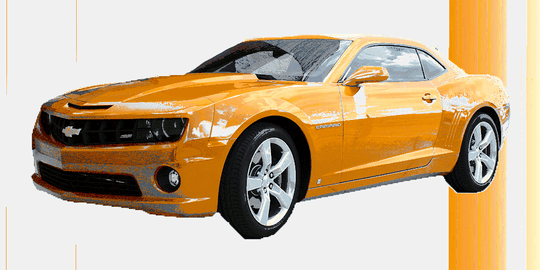

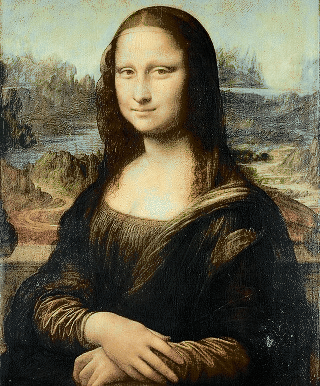

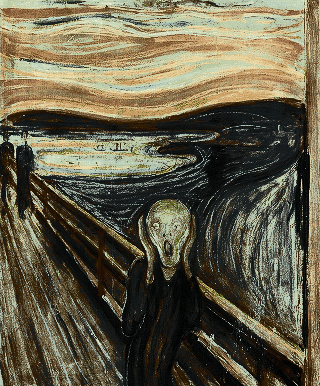

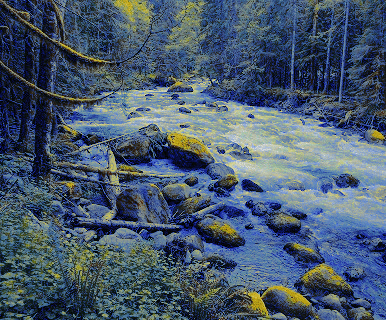

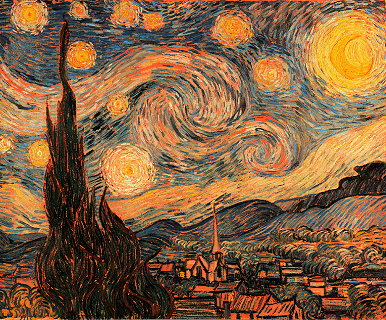

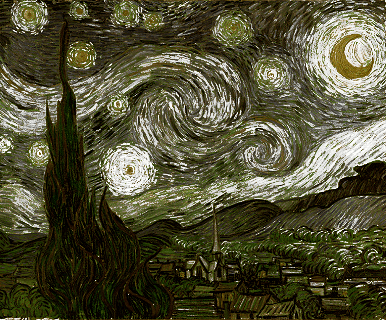

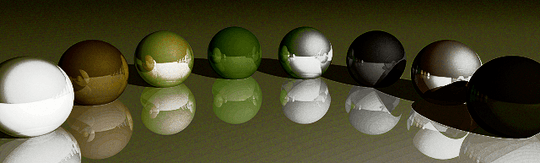

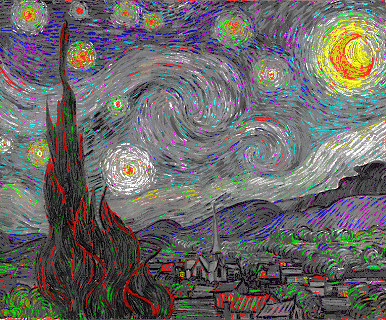

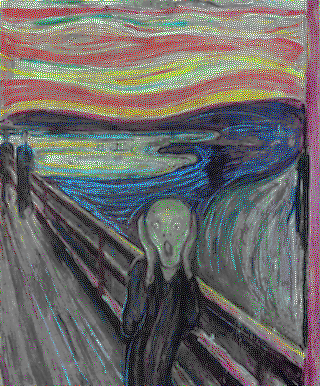

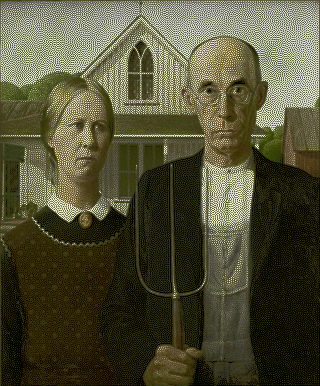

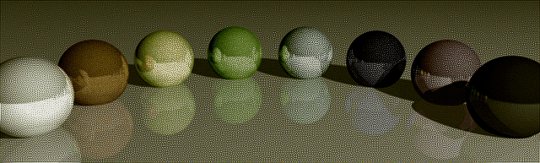

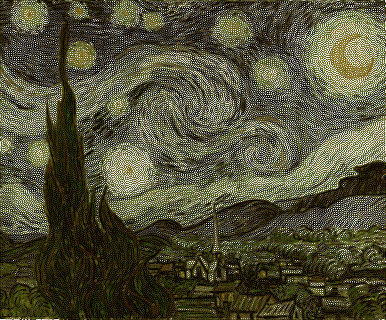

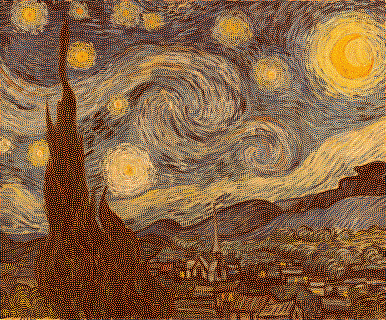

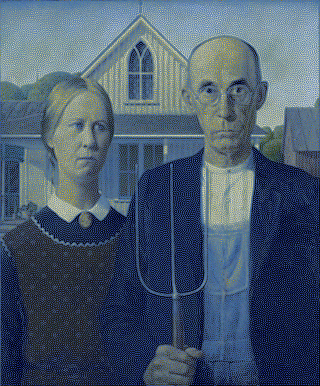

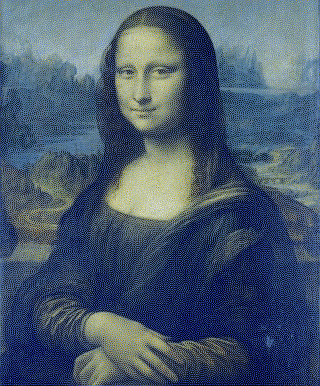

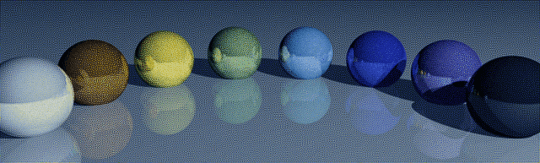

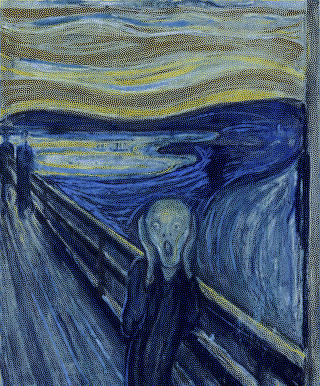

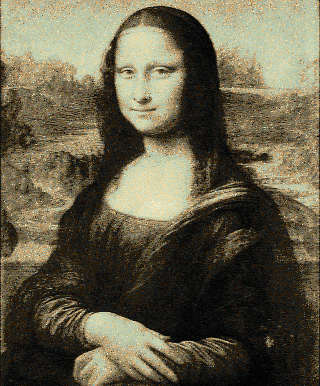

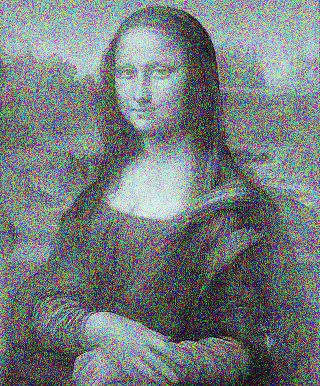

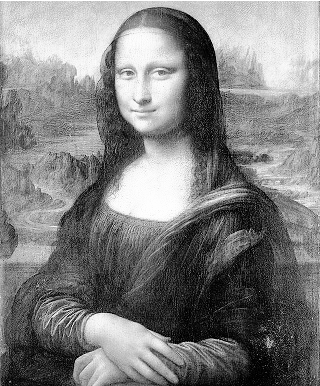

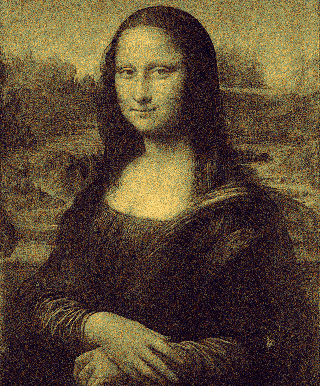

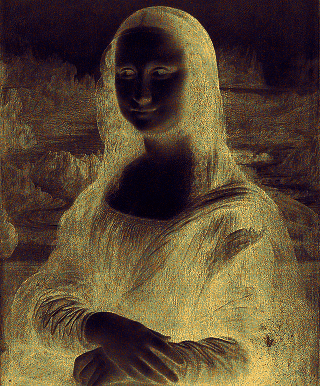

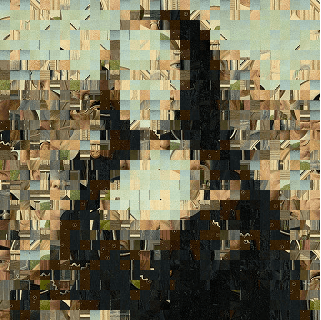

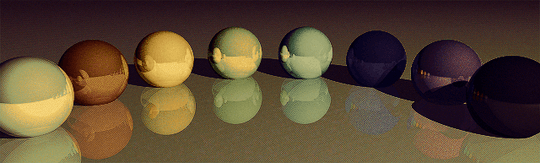

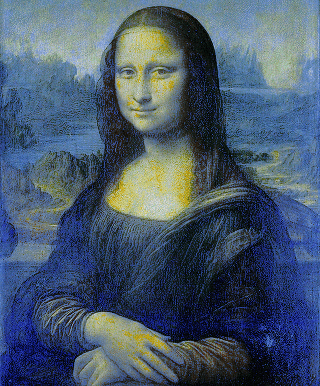

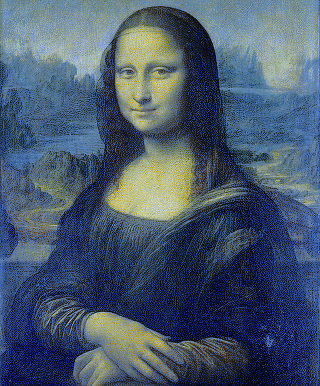

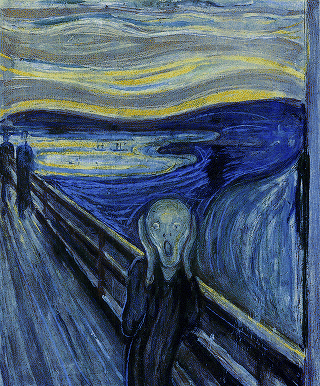

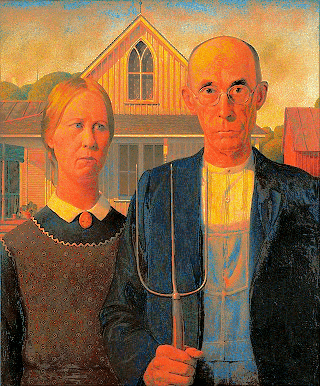

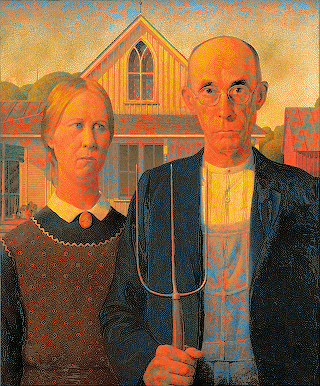

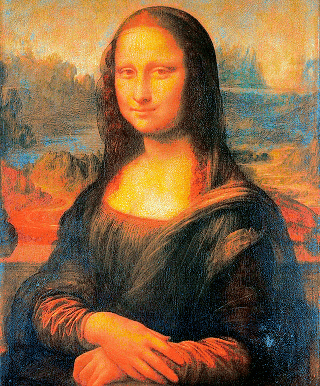

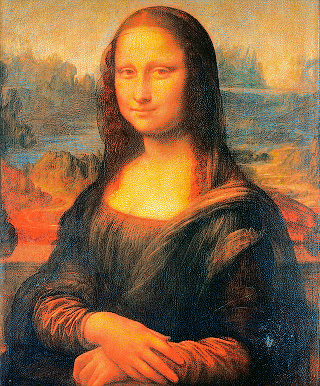

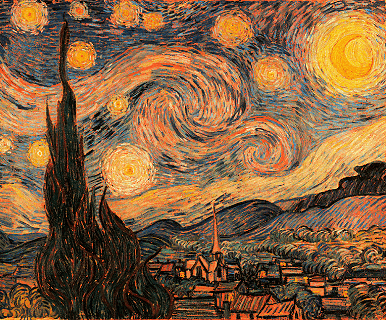

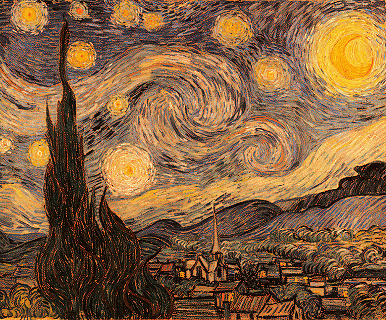

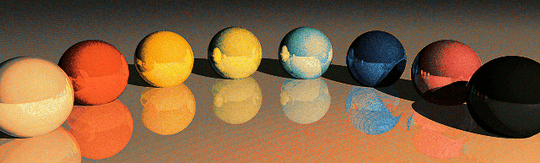

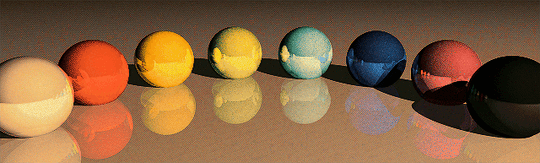

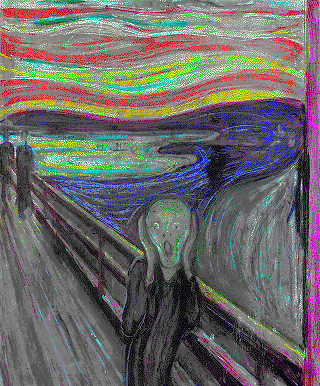

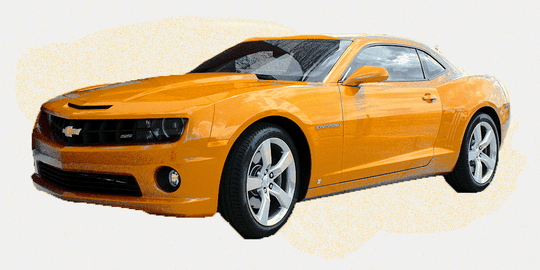

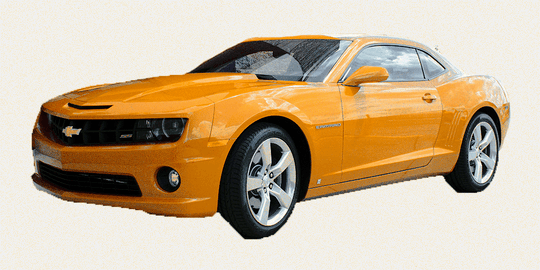

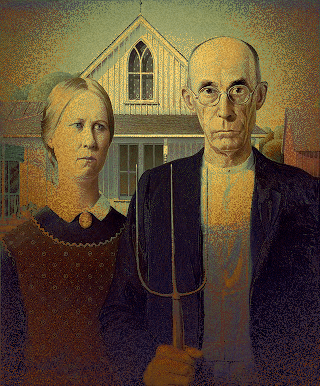

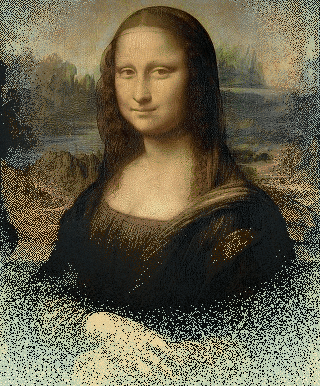

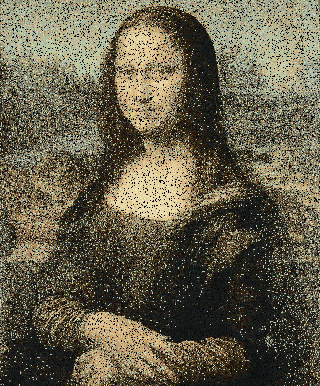

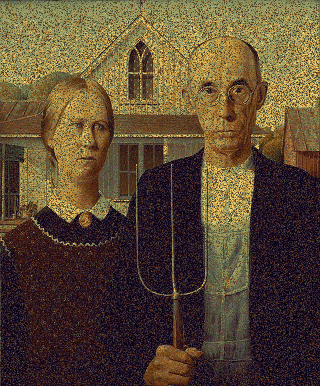

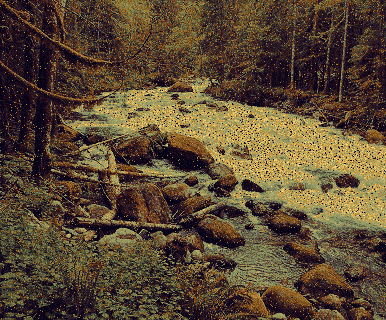

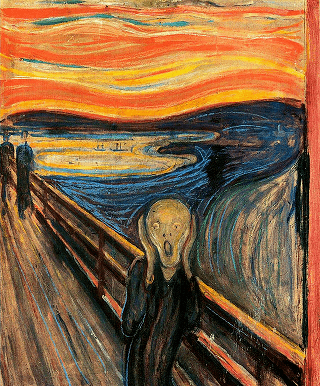

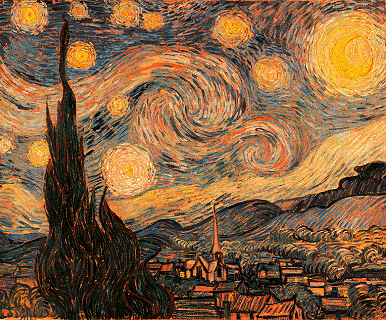

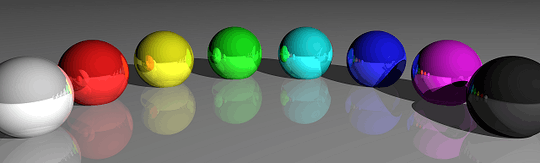

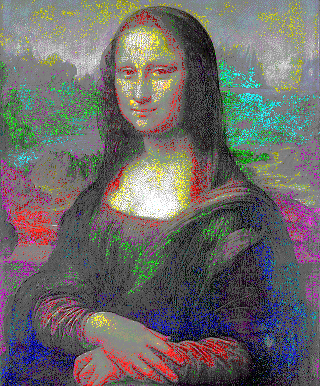

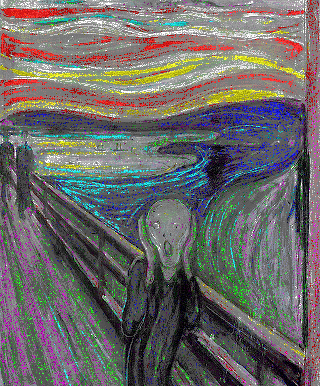

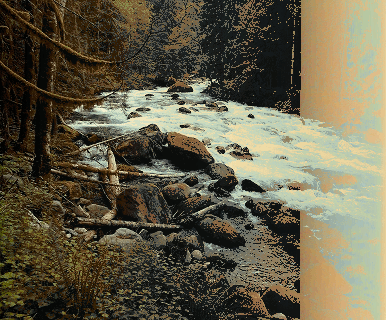

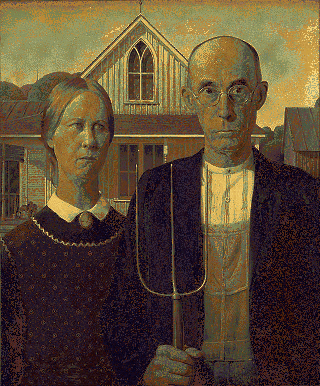

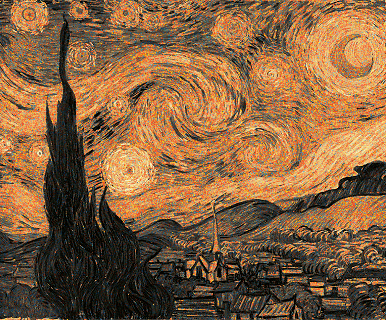

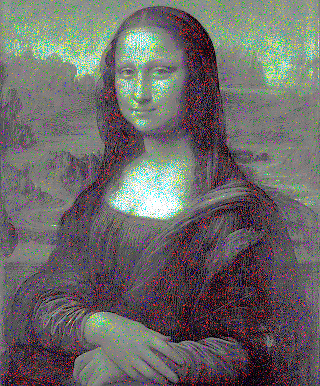

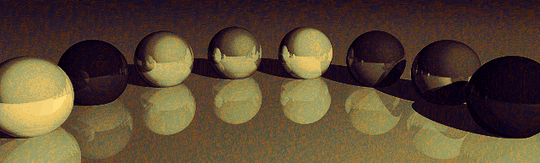

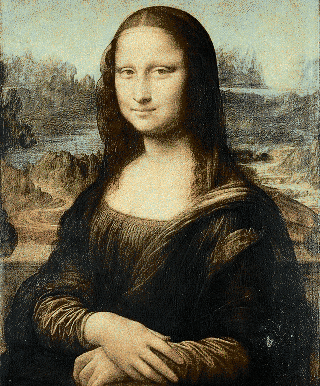

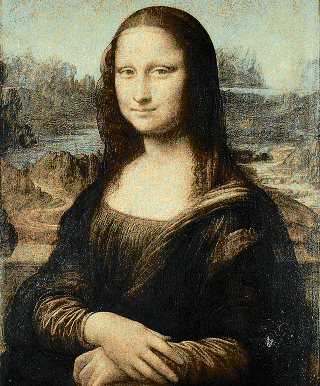

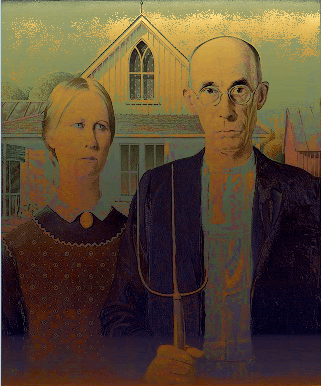

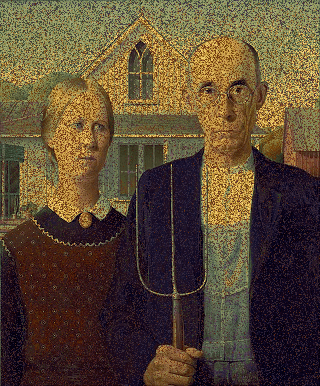

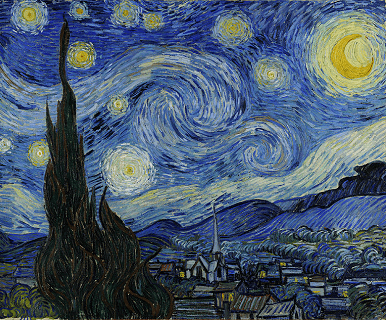

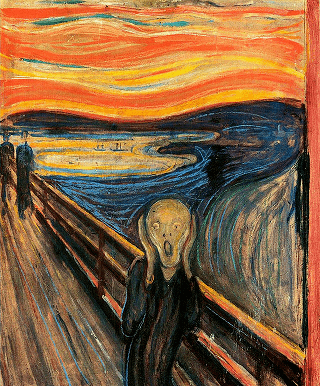

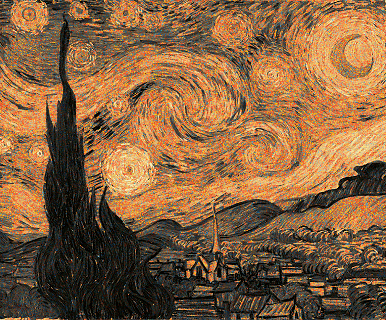

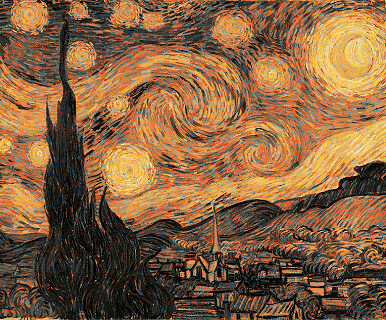

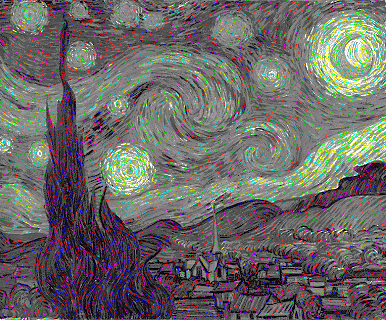

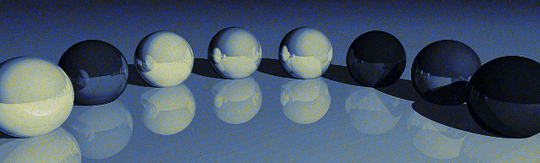

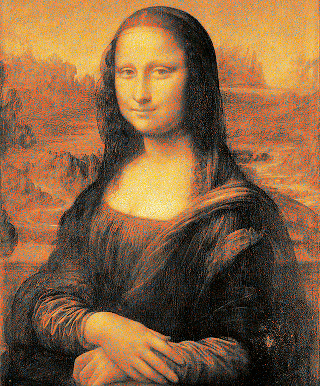

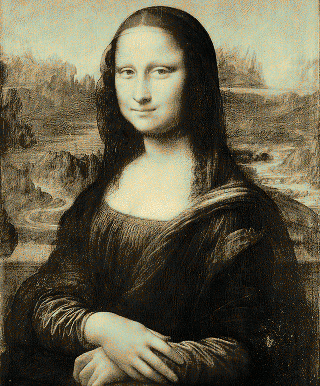

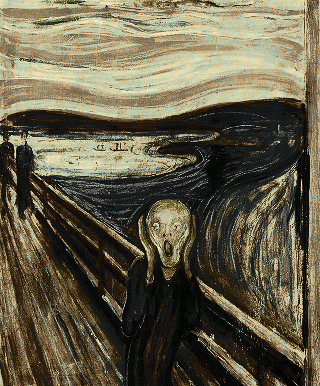

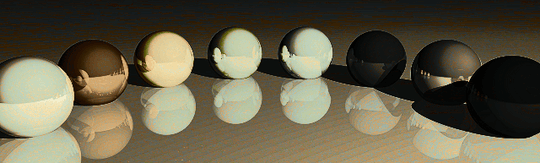

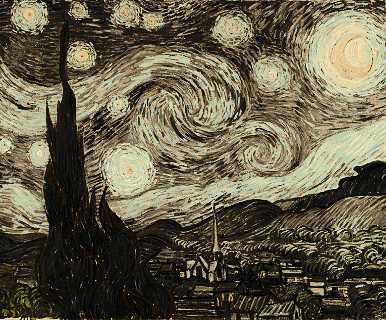

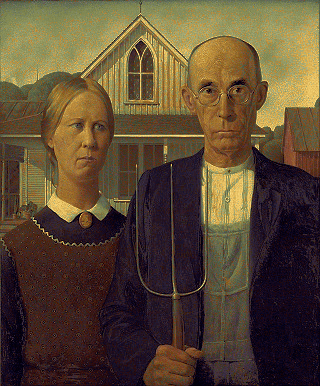

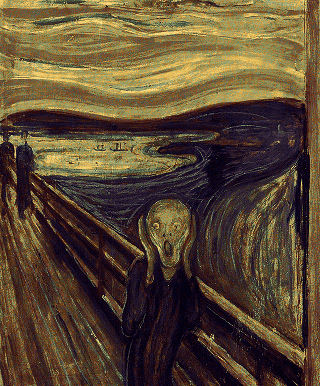

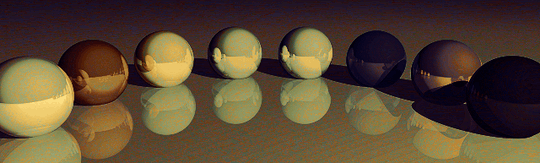

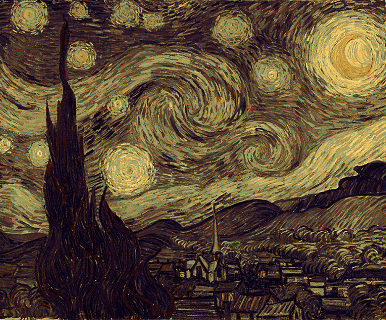

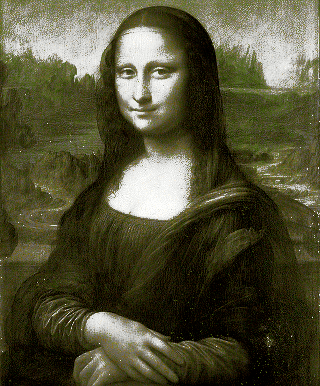

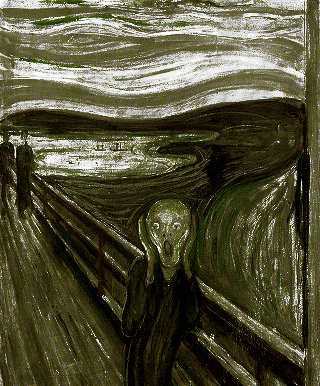

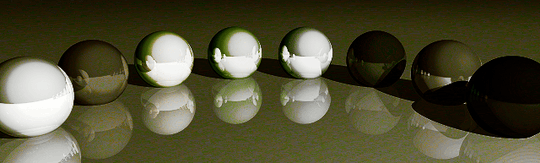

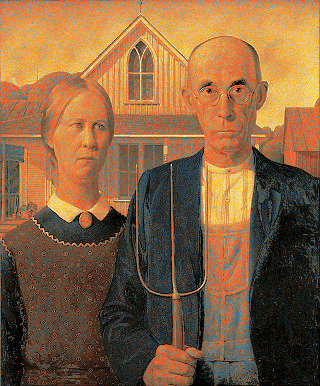

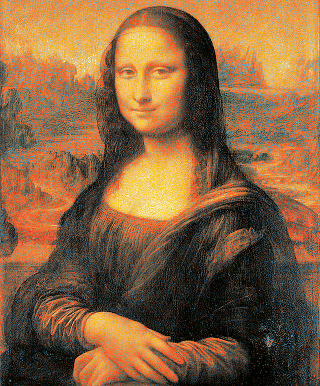

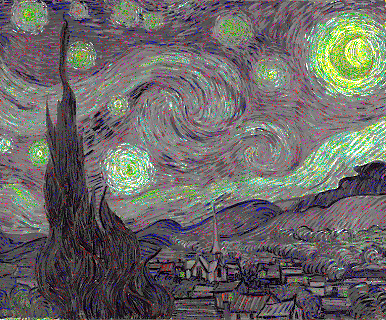

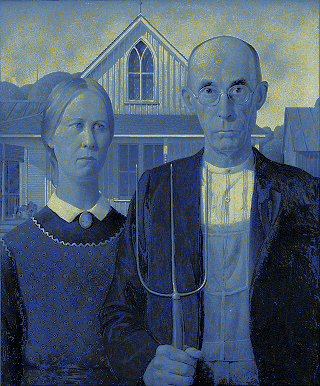

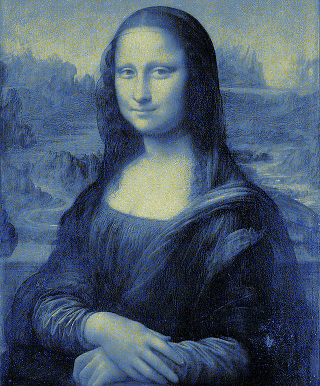

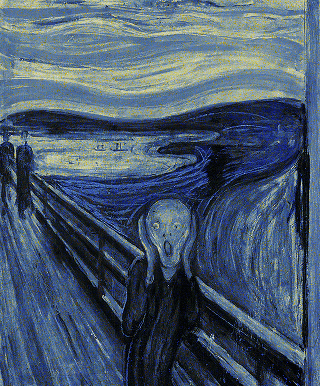

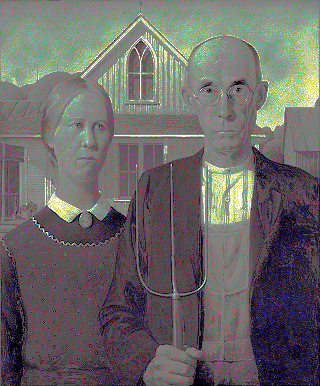

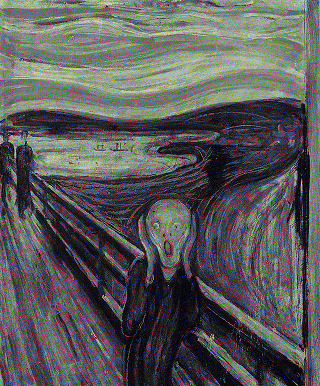

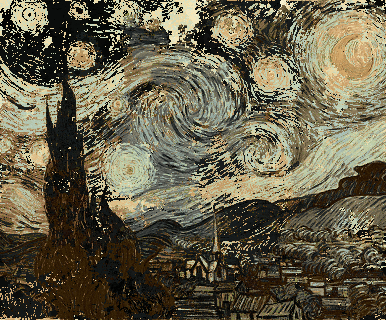

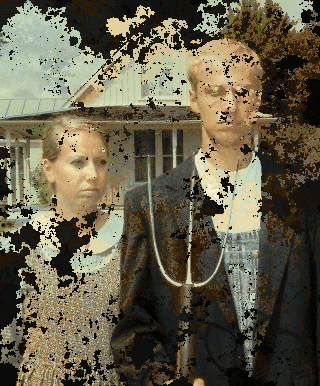

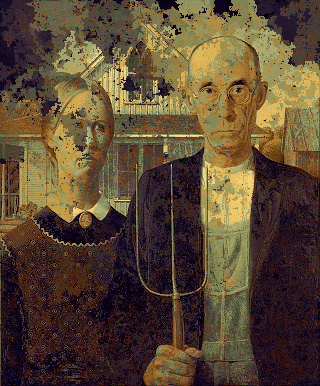

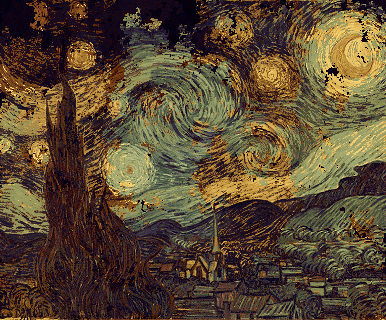

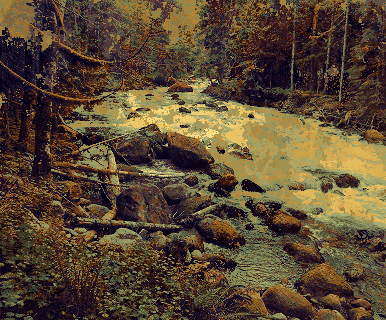

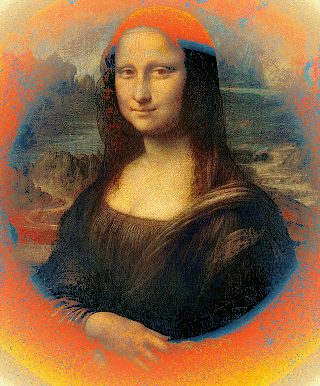

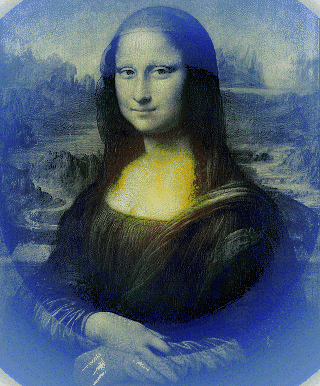

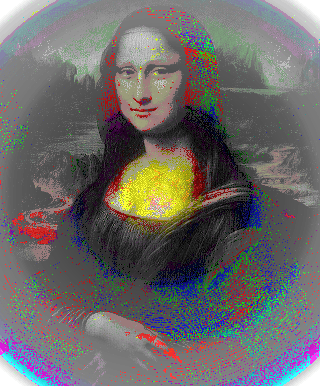

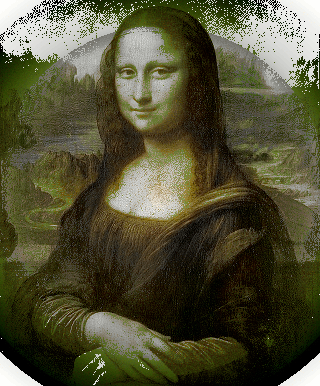

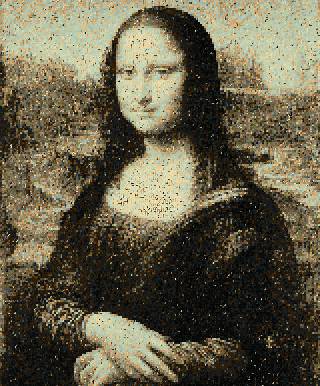

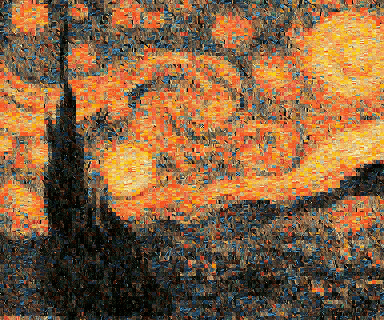

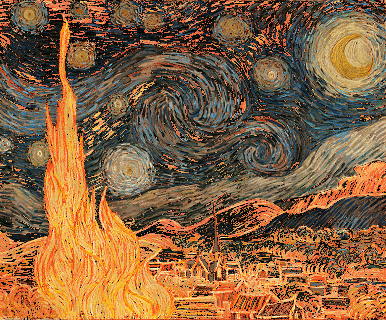

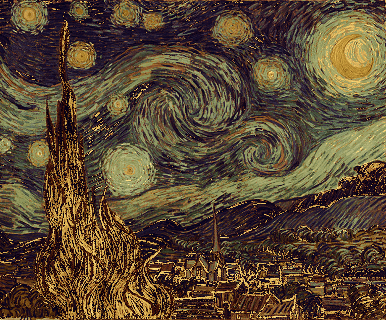

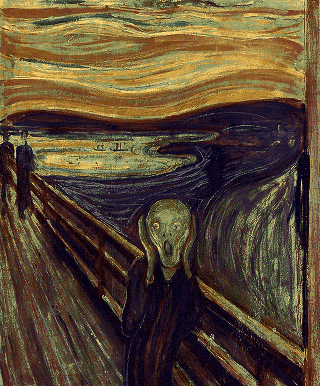

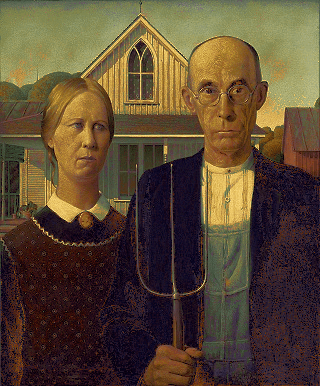

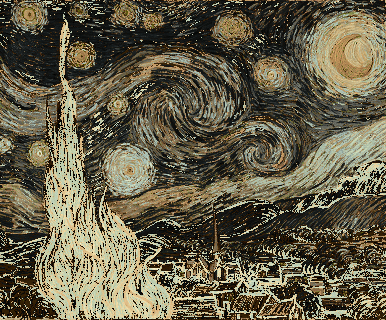

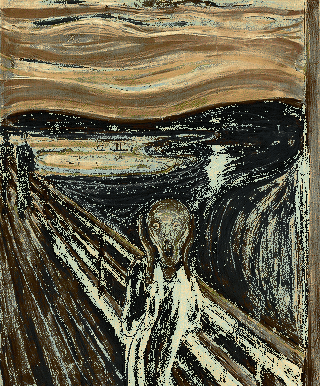

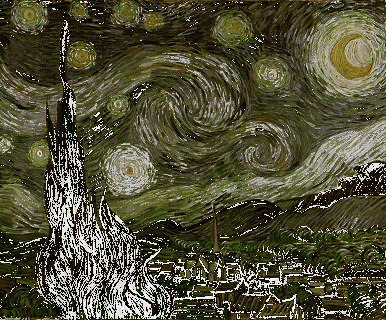

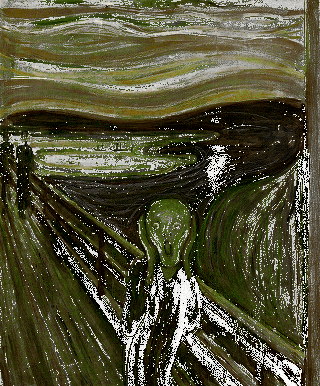

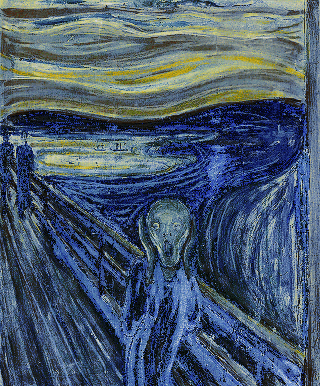

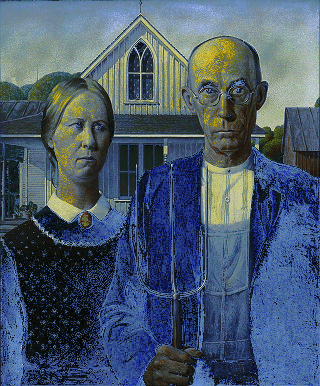

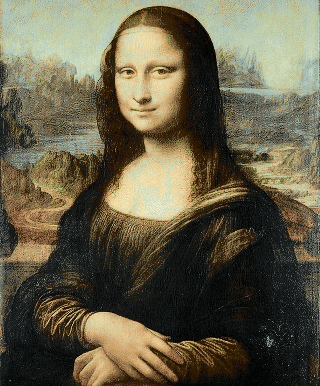

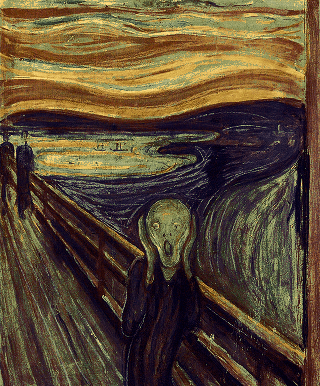

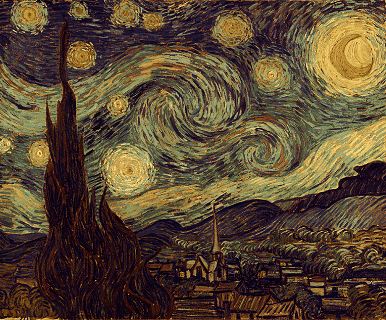

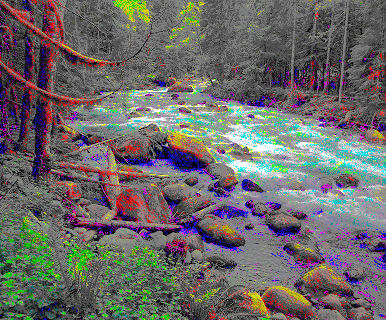

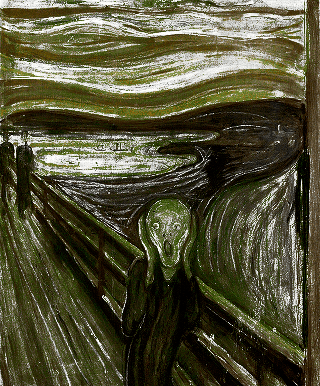

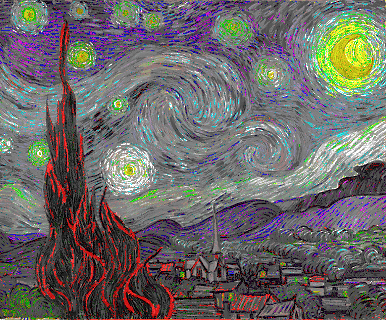

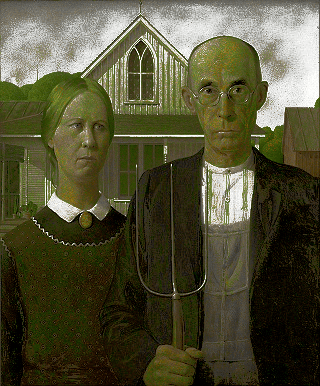

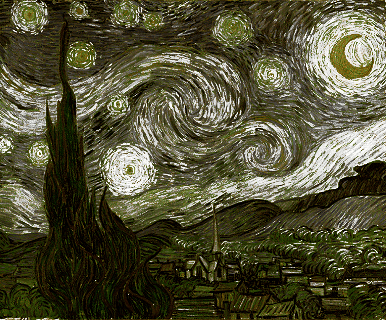

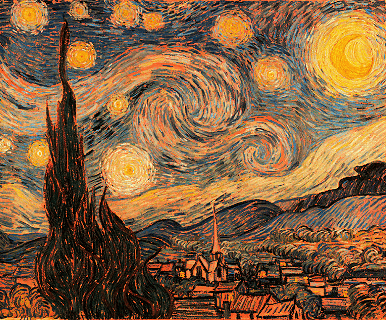

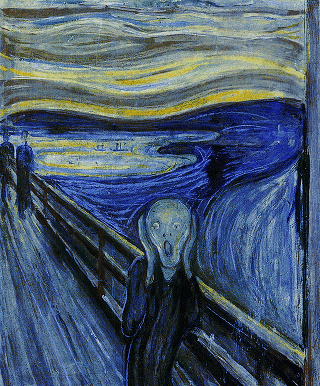

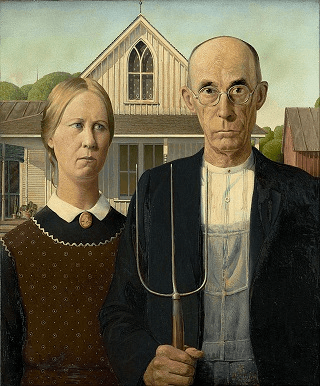

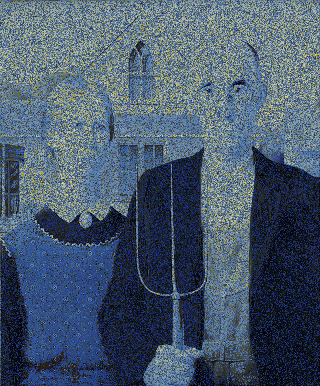

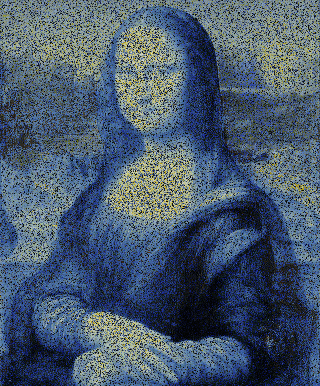

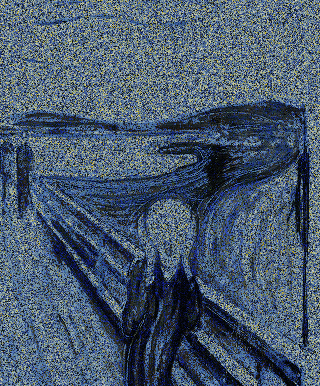

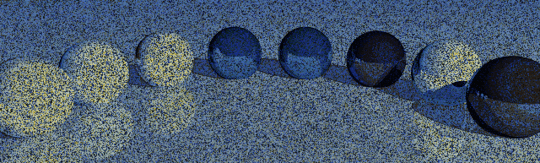

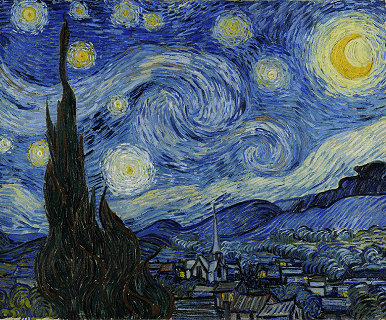

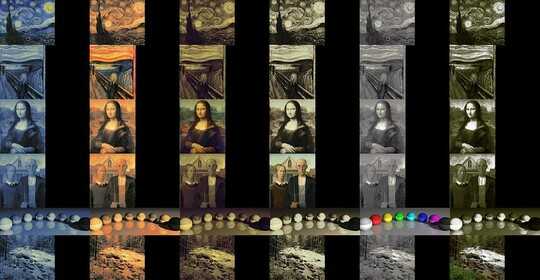

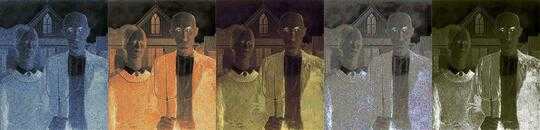

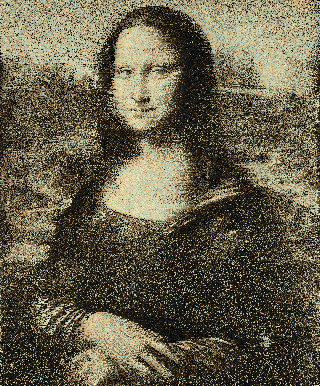

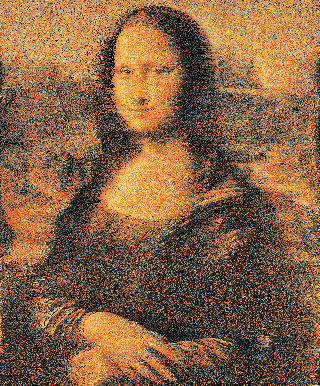

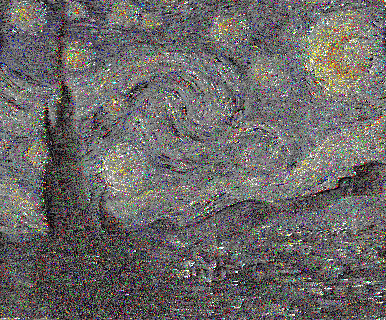

Mostly the same as the Perl version, just slightly better, but there are a few exceptions. Dithering is less noticeable in general. Scream -> Starry Night doesn't have the "flaming mountain" effect, and the Camaro looks less glitchy with the gray pixels. I think the Perl version's colorspace code has a bug with low-saturation pixels.

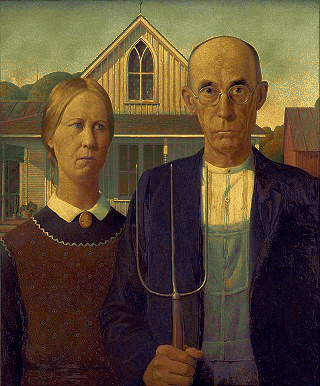

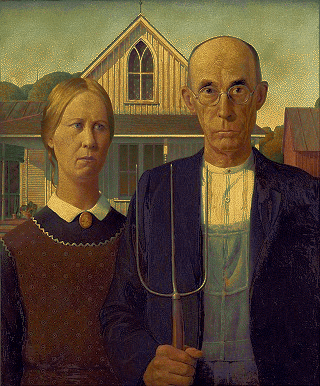

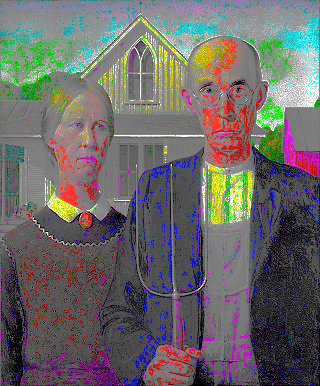

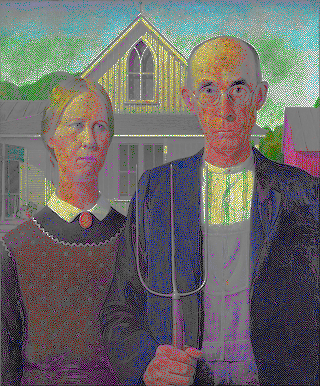

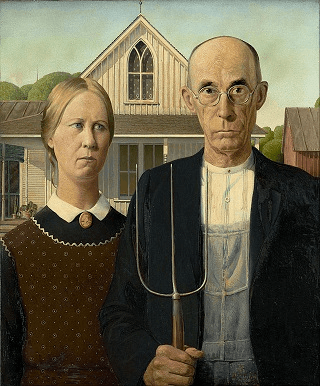

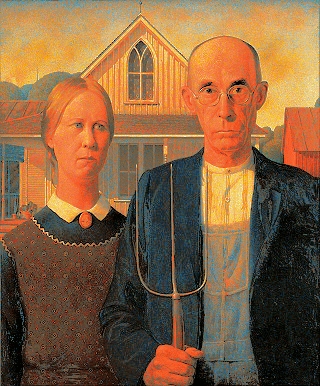

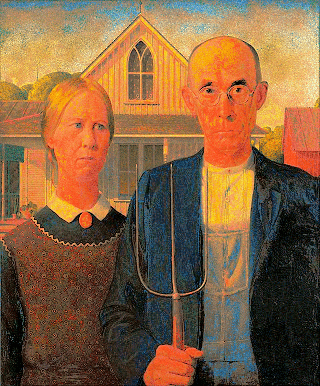

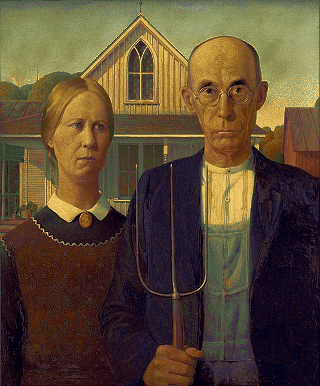

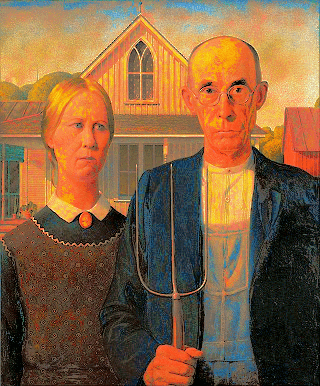

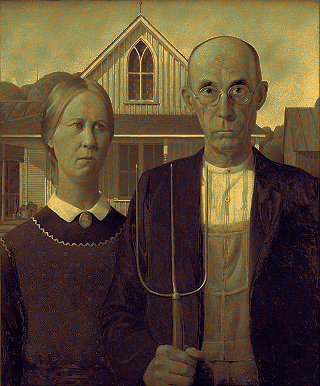

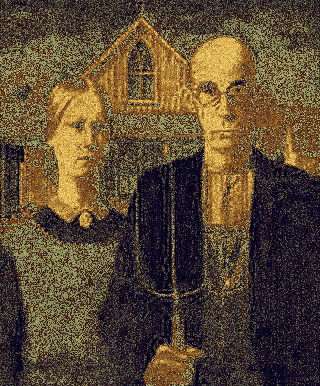

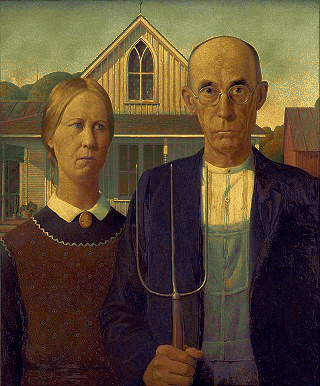

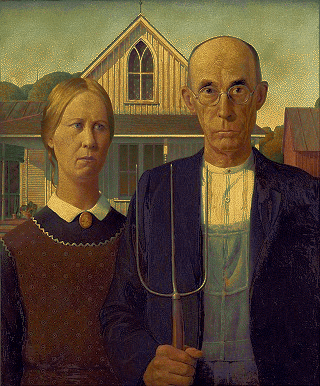

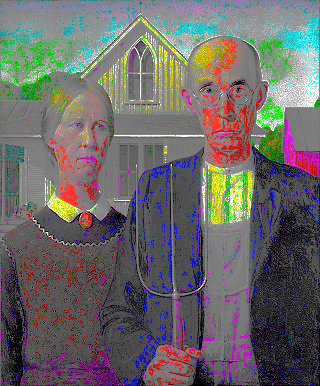

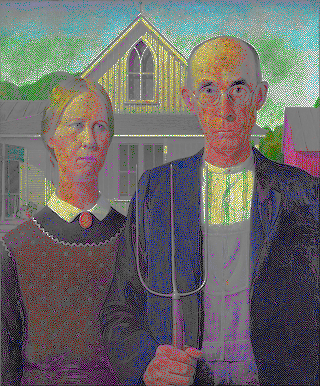

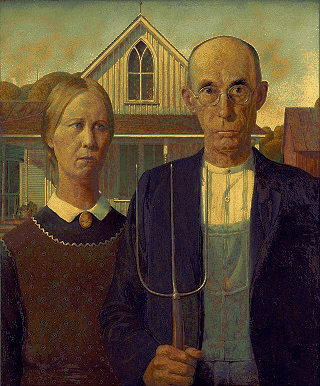

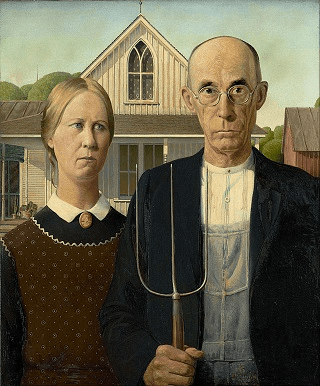

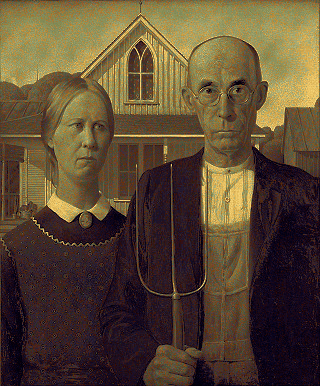

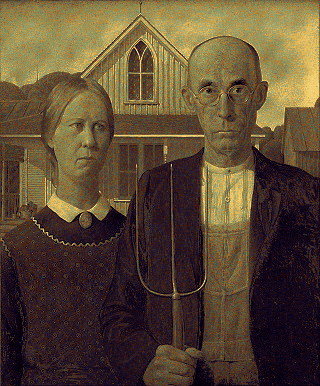

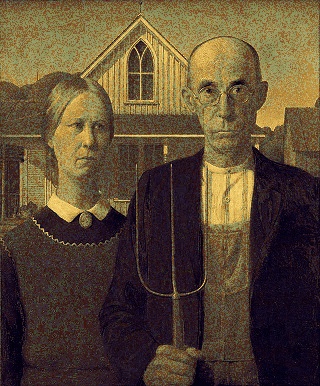

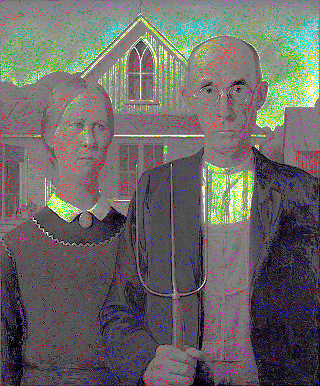

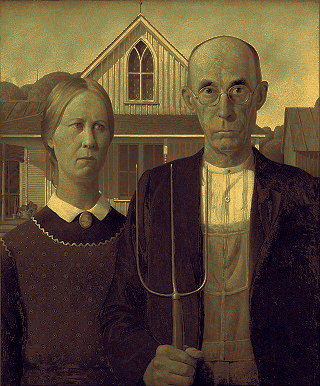

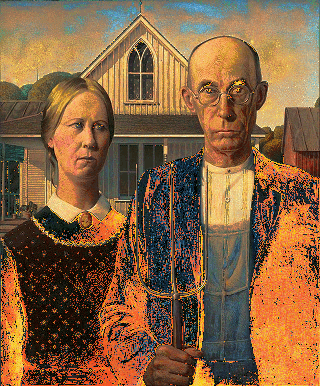

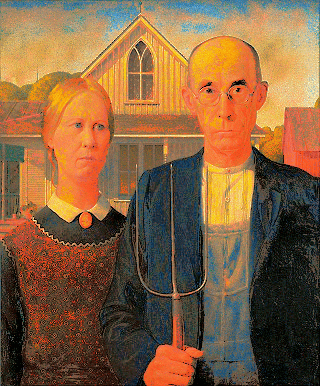

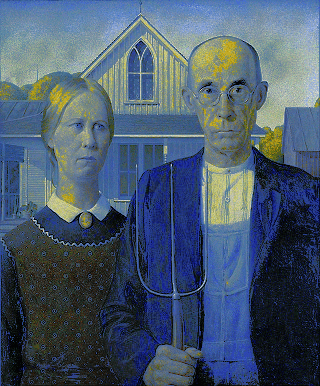

American Gothic palette

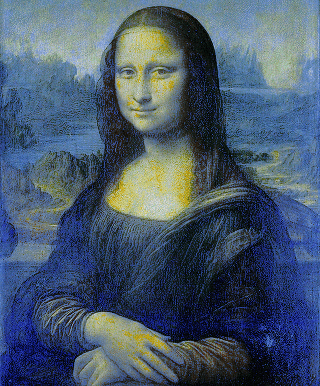

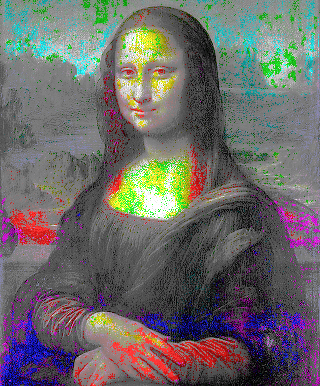

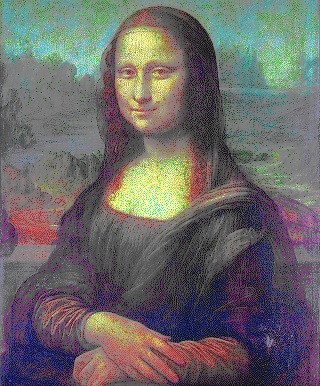

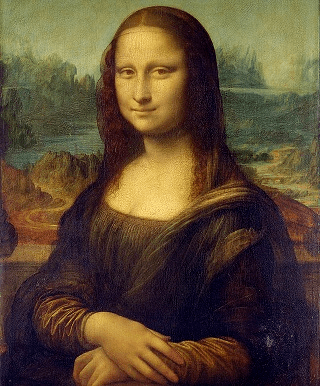

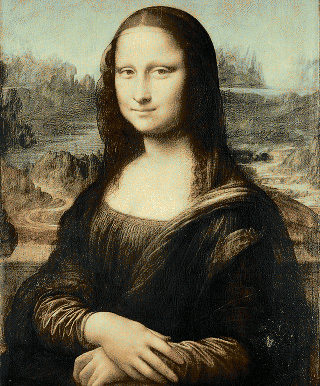

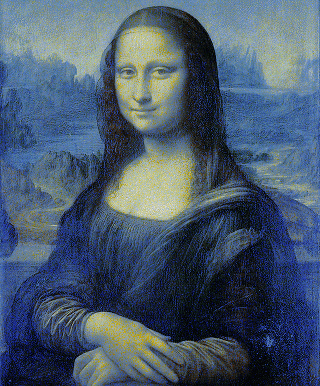

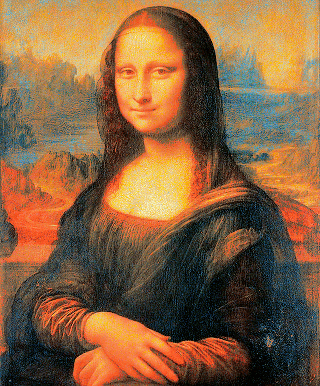

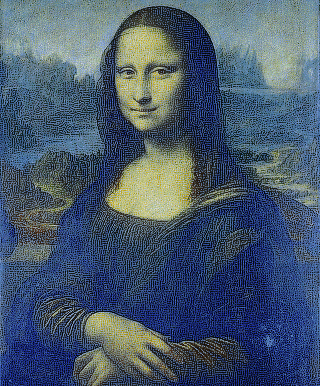

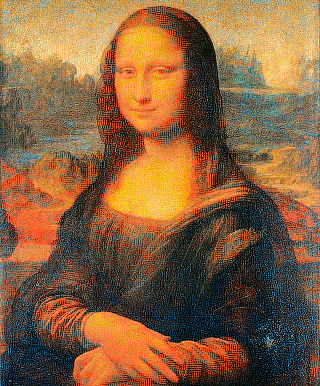

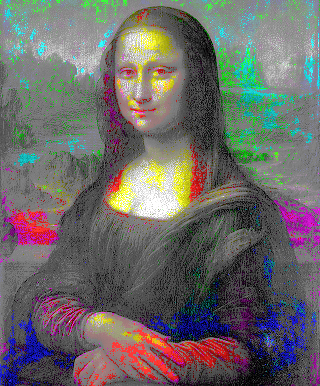

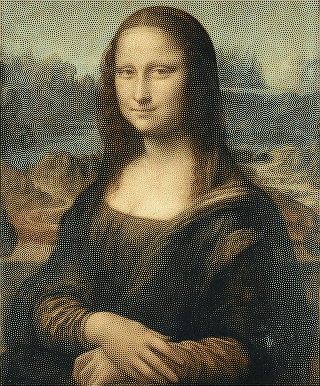

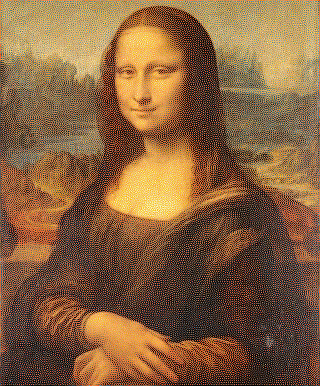

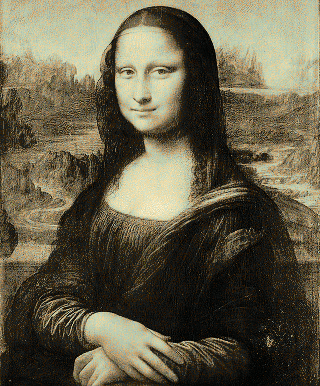

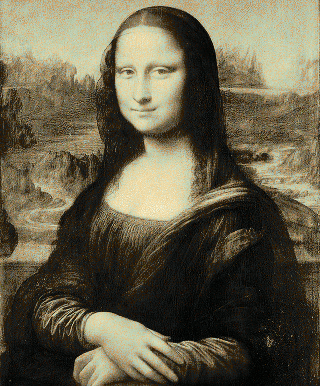

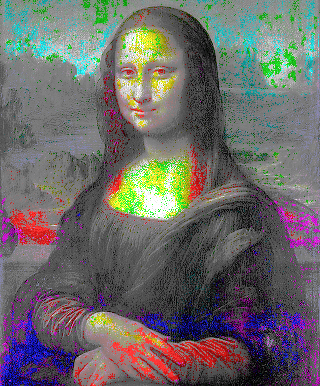

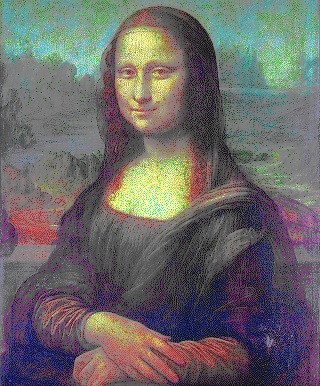

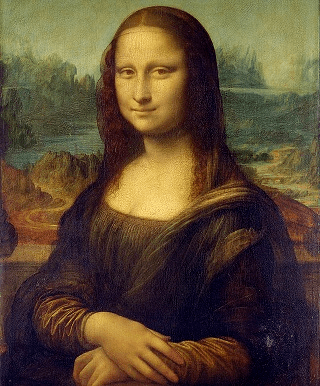

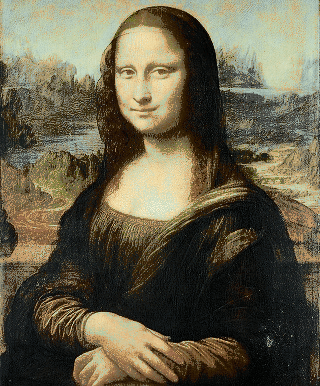

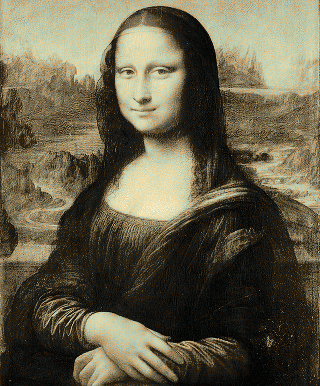

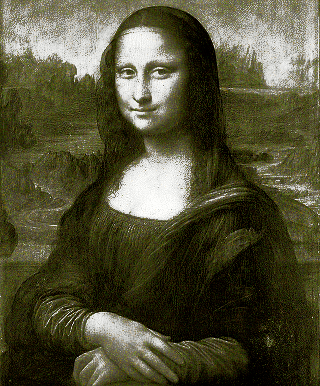

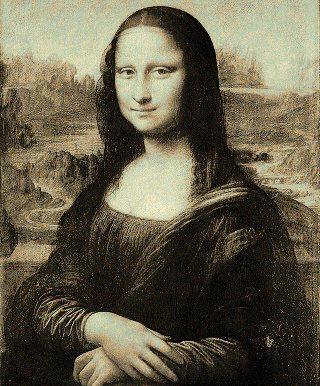

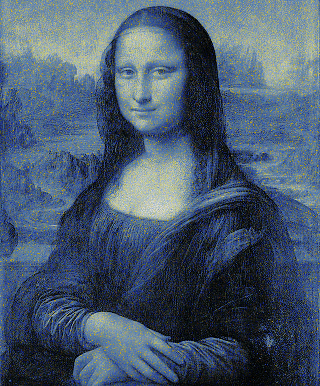

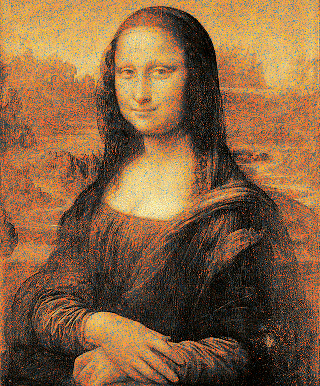

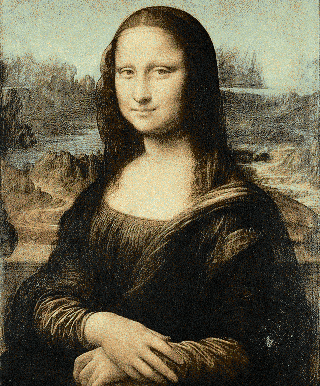

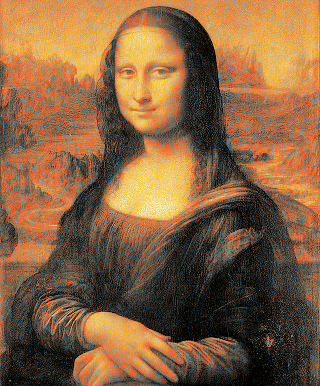

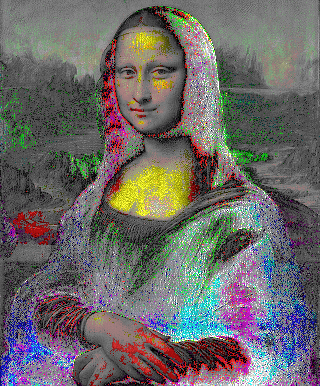

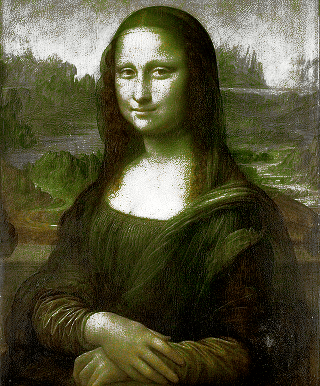

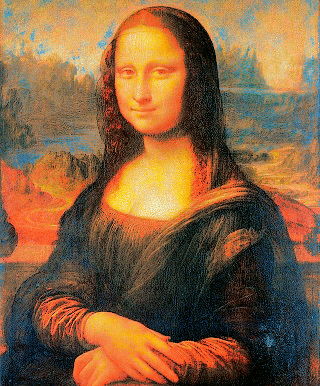

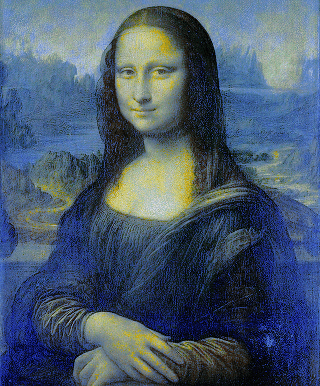

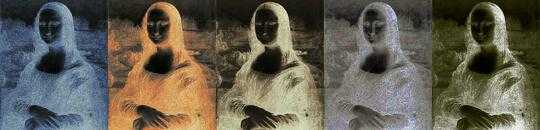

Mona Lisa palette

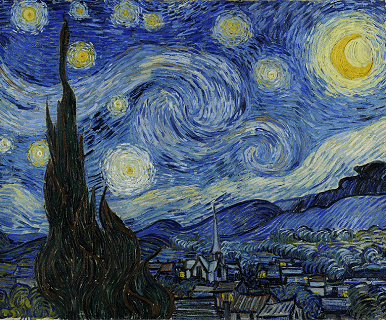

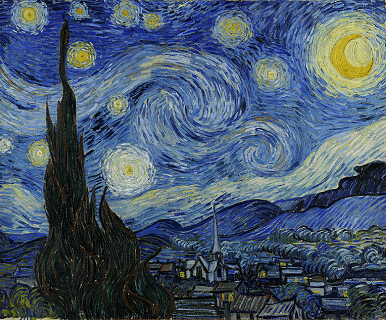

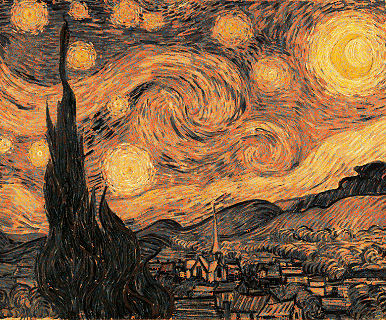

Starry Night palette

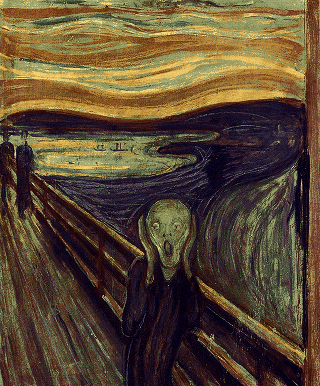

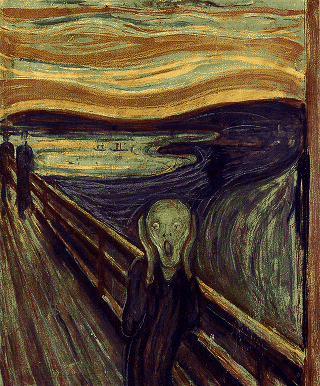

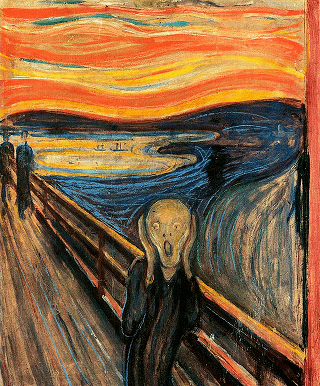

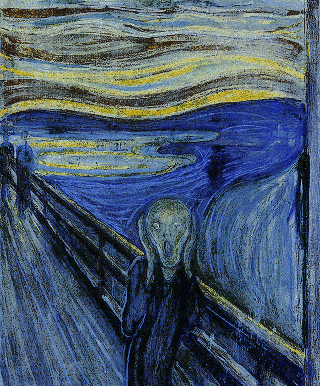

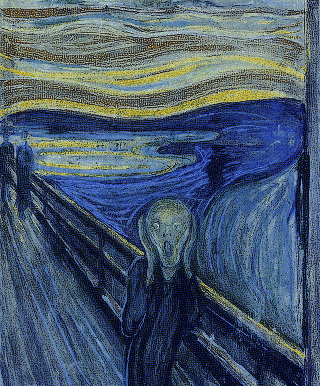

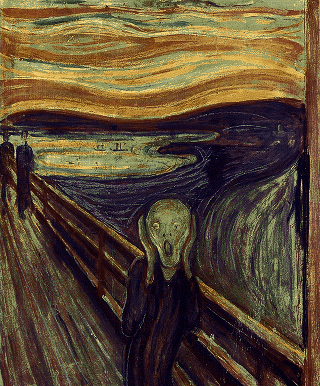

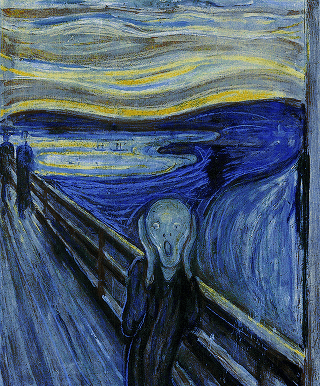

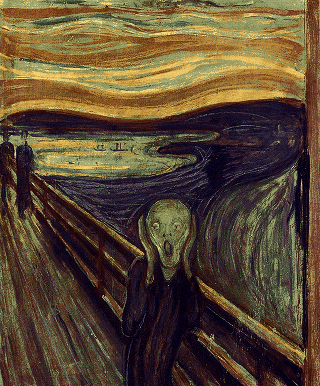

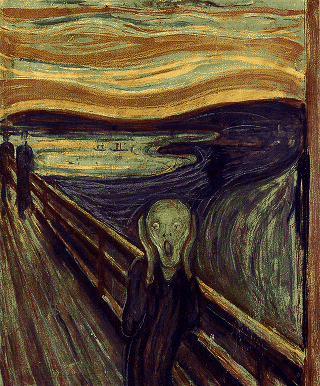

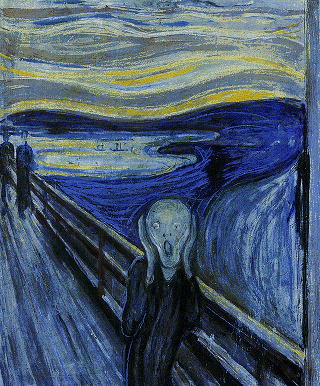

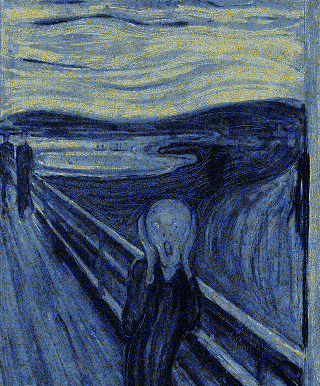

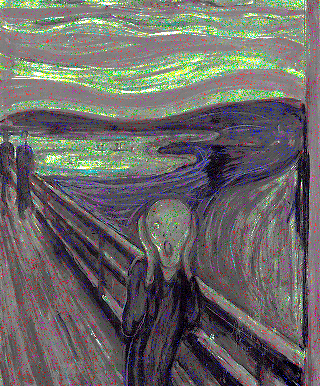

Scream palette

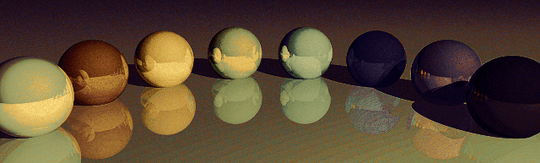

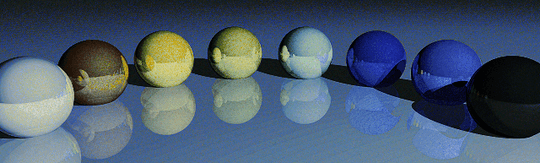

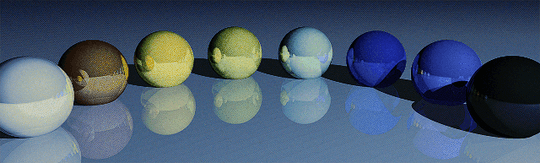

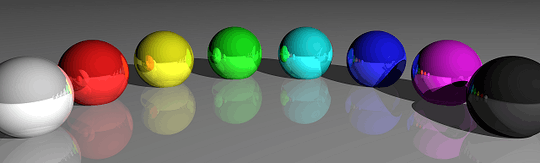

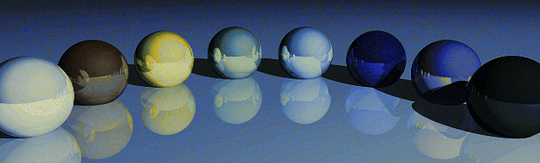

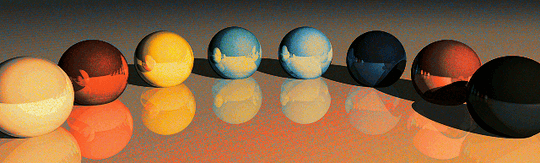

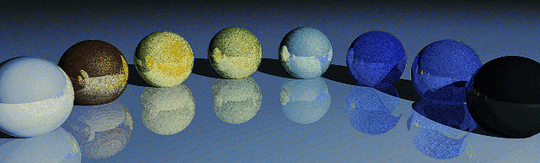

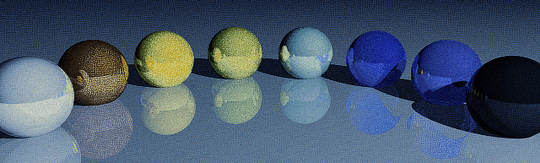

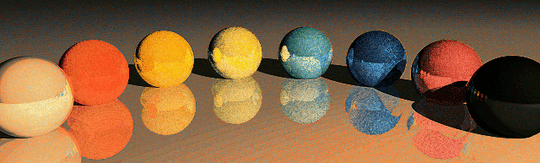

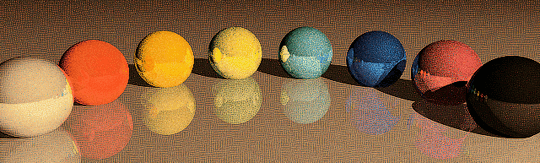

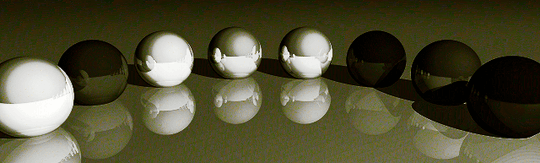

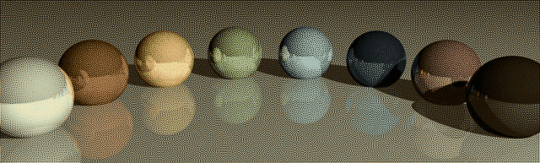

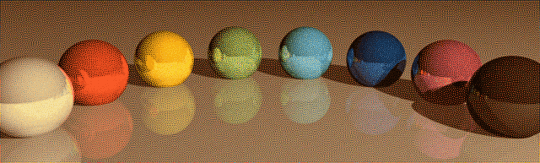

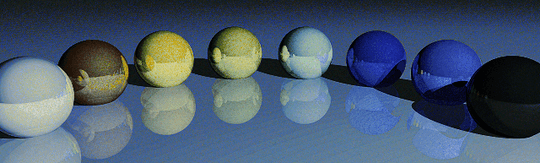

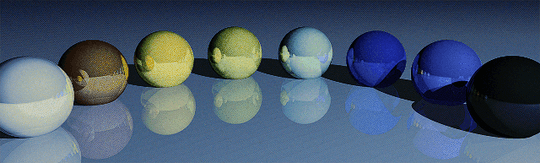

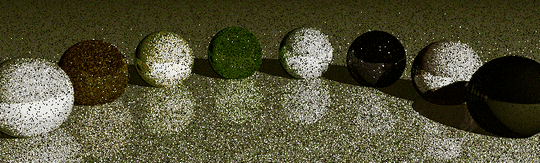

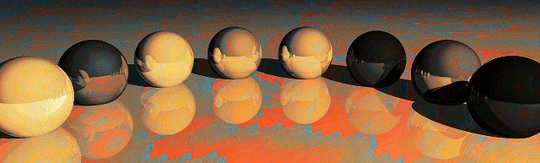

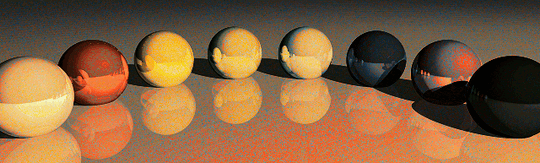

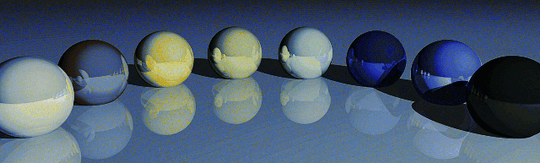

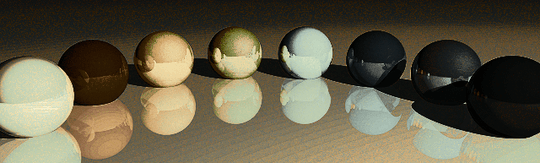

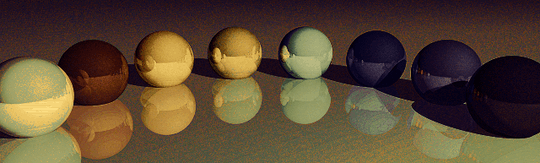

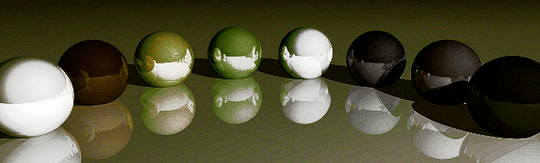

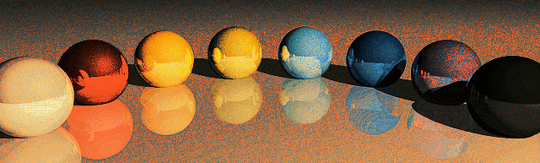

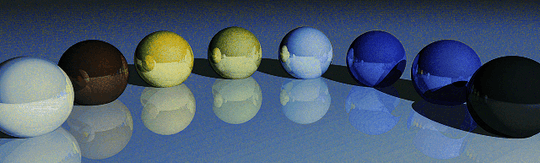

Spheres palette

Mustang (Camaro palette)

Camaro (Mustang palette)

(

(

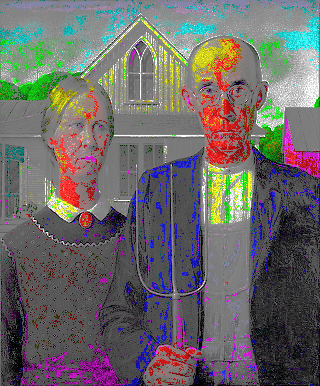

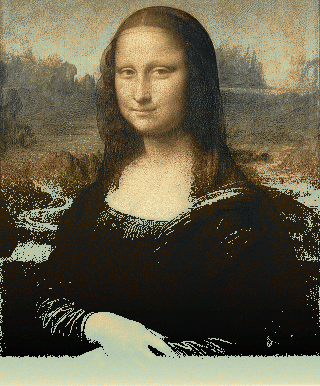

American Gothic using Mona Lisa palette

American Gothic using Mona Lisa palette

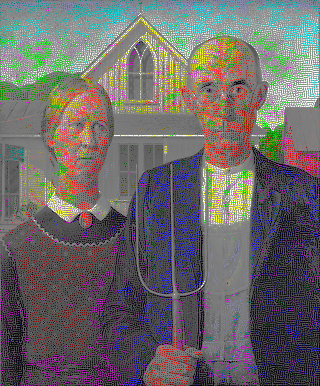

American Gothic using Rainbow palette

American Gothic using Rainbow palette

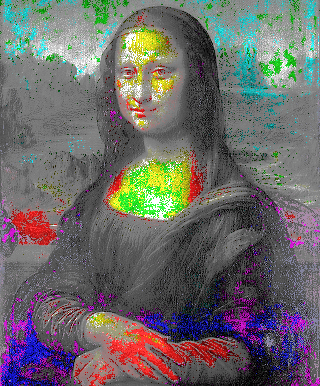

Mona Lisa using Scream palette

Mona Lisa using Scream palette

Mona Lisa using Rainbow palette

Mona Lisa using Rainbow palette

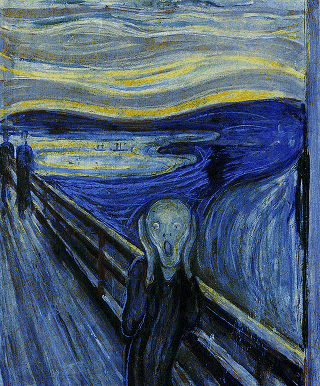

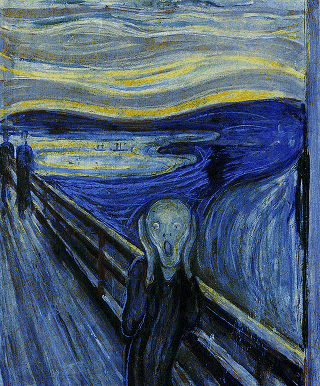

Scream using Starry Night palette

Scream using Starry Night palette

5

Related: https://github.com/jcjohnson/neural-style

– Vi. – 2015-11-16T16:03:18.827Apparently vice.com published an article about this

– aditsu quit because SE is EVIL – 2018-01-24T09:27:21.637Must the process be entirely unguided, or is it permitted to give the program hints about the parts of the image which are most important (e.g. Lisa's face)? – Peter Taylor – 2014-07-09T07:13:52.110

@Quincunx I do know that only one is a png, but I just made sure that my script only considers the RGB values in each image. The strings 'palette.png' and 'copy.png' were just placeholders since PIL will load most image types. If it helps anyone I could put all the images in the same format, say bmp. – Calvin's Hobbies – 2014-07-09T07:30:06.547

@PeterTaylor I'd say that you should not have to manually tell the program what parts of the image to put more detail into. That goes against the idea of your algorithm working in the general case just given two images. You may definitely do this programmatically though. (I'd still love to see your output even if you stick with manual detailing!) – Calvin's Hobbies – 2014-07-09T07:39:11.767

22To increase the hits on your question you may wish to consider entitling it, "American Gothic in the palette of Mona Lisa: Rearrange the pixels" – DavidC – 2014-07-09T12:39:54.103

I always referred to this as "pixel shuffling" https://www.flickr.com/photos/mjswart/295731754/in/photolist-sjEdW-s8GKm-5YQXXJ-4xEMjD-4vUyGR-swN8k-swafc-suDjy

– Michael J Swart – 2014-07-09T14:03:30.26714Hi, I just want to congratulate you on this original challenge! Very refreshing and interesting. – bolov – 2014-07-09T15:26:00.297

Thanks for the support @bolov. I just added a couple more images for some more variety. – Calvin's Hobbies – 2014-07-09T17:13:43.323

@Calvin'sHobbies I would definitely do this challenge, if I knew how to work with images :P – qwr – 2014-07-09T17:49:29.203

1@qwr It is not that difficult - can you tell me a language you know? I am pretty sure you can easily edit images in most languages - and its great fun=) PS: Calvin'sHobbies you are making sure you keep us busy by adding new images, eh! – flawr – 2014-07-09T20:46:50.917

@flawr I mostly do python. The reason I was hesitant to do this problem is that I wasn't sure that simple luminance matching would work; I wanted maybe edge detection but that's a hard task. – qwr – 2014-07-09T21:11:14.270

@qwr have a look at the codes here, they did similar things (but I do not know how easy/difficult): https://codegolf.stackexchange.com/questions/11141/encode-images-into-tweets-extreme-image-compression-edition

– flawr – 2014-07-09T21:26:08.6836I'm glad this isn't a [code-golf]. – Ming-Tang – 2014-07-10T00:47:23.497

1Love the animation! :D – eithed – 2014-07-10T06:43:17.733

1Fantastic job with the gif! – millinon – 2014-07-10T21:05:31.107

The bitpwner's AG -> aditsu's is bizarre one. Can't believe that they indeed share the same colours. I guess aditsu's approach, in case of sorting takes into account surrounding pixels as well? Will take a look at that when I've got a moment – eithed – 2014-07-11T11:30:02.713

Why don't you edit your question and include your code here, instead of on a separate site, to stop link rot? – Michael A – 2014-07-11T13:35:03.723

@Ben I just included a shorter version of the validity checker but the animation script is quite long and is somewhat of an afterthought on an question that's already getting too long. – Calvin's Hobbies – 2014-07-11T18:36:04.867

So how long would it take to brute force all combinations of the pixels, and choose the picture with the shortest averaged pixel distance? – Cruncher – 2014-07-11T18:37:50.413

http://gif-explode.com/?explode=http://i.stack.imgur.com/GEeS4.gif how come the frames look wrong? I was interested about the rotating image example. But the last frame when decomposing it is simply incorrect. – Cruncher – 2014-07-11T18:46:14.547

@Cruncher I know. The original images aren't like that, it's just a gif artifact. I may make an hd video of these animations soon so they can be viewed properly. – Calvin's Hobbies – 2014-07-11T18:51:50.430

1

@Calvin'sHobbies http://jsfiddle.net/eithe/J7jEk/31/ - boredom got to me :D

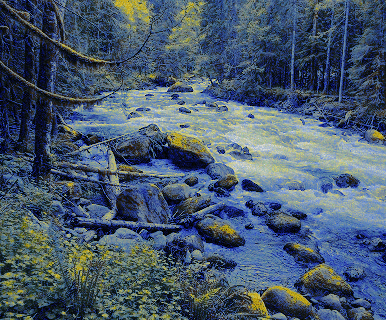

– eithed – 2014-07-11T21:25:22.167@eithedog Very nice! If you want I actually scaled down my tree, the original is at http://i.stack.imgur.com/PjUCP.png.

– Calvin's Hobbies – 2014-07-11T21:55:02.443@Calvin'sHobbies - cheers! now with the originals I'll have to figure out why the hell is red moving :D (at least black stays in place) – eithed – 2014-07-11T22:32:48.577

While doing the 90° turn with the tree, the borders should remain mainly untouched. So you are not using the travel distance optimized version, do you? – Leif – 2014-07-12T16:02:56.877

14My mobile data limit gets a terrible burn every time I visit this page. – Vectorized – 2014-07-12T16:50:01.920

1@Calvin'sHobbies When you make HD videos, please make the pixels twice or thrice the size and let them move very slowly and only one normal pixel at a time. This should make it a little bit easier to distinguish the pixels and see them move. It's naturally impossible with normal sized pixels that cannot move less than one pixel at a time. – Leif – 2014-07-12T18:40:44.280

This is well on its way to becoming the most popular question on the site. Congratulations! – Joe Z. – 2014-07-13T00:49:49.060

if you're editing the pixels in a 2D array moving on an expanding circular track from the center, you get a frame-like border. it's not perfect but hides the problem american gothic to mona lisa

– bebe – 2014-07-13T19:16:10.100For the record, the optimal solution can be computed in O(N^3) using the Hungarian algorithm. Of course, it's not very practical for N = 295 x 357 However, if you enforce a constraint that a point only be sent to one of its 10 closest neighbors, it starts to become tractable. – Arthur B – 2014-07-11T16:26:02.957

The rainbow Mona Lisa one runs much slower than the rest and the animation is really jerky. I think you might want to either edit the time between frames or create more frames. – trlkly – 2014-07-14T03:39:59.693

@trlkly I made it slow so people could see the process better. Adding a lot more frames would put it over the 2MB limit. – Calvin's Hobbies – 2014-07-14T04:10:25.753

@Calvin'sHobbies Then may I at least suggest mentioning that in the Question itself? Like maybe changing the caption above to say "Here is aditsu's rainbow Mona Lisa, running in slow motion so you can see the details." I actually originally thought something was wrong on my end. Also, too bad you can't add any more frames to make it smoother, though. – trlkly – 2014-07-14T04:31:19.097

Good job. I knew there was a shorter wording, but my brain was fried at the time. BTW, did you know that this is the first Question on all Stack Exchange that I've voted up? – trlkly – 2014-07-14T23:48:36.463

The solution keep getting better and better. Awesome – bolov – 2014-07-16T09:41:32.857

Thanks everyone for the support and especially for the continually various and interesting answers. In case anyone was wondering I've kind of stalled on making an animations video and by now there's a pretty small chance it'll happen, sorry. :/ – Calvin's Hobbies – 2014-07-16T10:17:45.887